Wikipedia:Ambassadors/Research/Article quality/Results

Spring 2012 United States and Canada student article quality research results

To measure the impact of student work on article quality in the U.S. and Canada Education Programs, a group of Wikipedians led by Mike Christie repeated the article assessment study from the Public Policy Initiative for the Spring 2012 classes (which were active between January and May). A total of 124 articles that students worked on were assessed on a 26-point quality scale that draws directly from the Wikipedia 1.0 assessment criteria (Stub/Start/C/B/GA/A/FA): 82 existing articles and 42 new articles. Of the 124 articles, 109 improved after student work, meaning 87.9% of articles were improved by student edits.

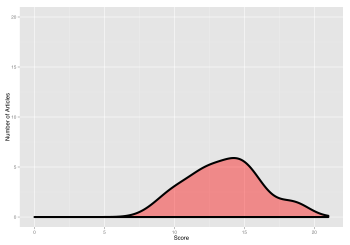

Blue: Quality distribution before student work

Pink: Quality distribution after student work

On average, existing articles improved by 2.94 points (from 11.26 to 14.20), while new articles reached an average score of 13.55. In rough practical terms, the average class started from either nonexistent articles or (typically) weak Start-class articles and ended up with C-class or strong Start-class articles. With new and existing articles combined, and counting each new article's full score as improvement from zero, students improved articles by about 6.5 points on average, which is 0.7 points more than the average improvement made by students in the Public Policy Initiative.
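The combined figure of about 6.5 points can be reproduced from the reported averages as a weighted mean over all 124 articles, assuming each new article counts as starting from a score of 0 so that its whole final score is its improvement. The short Python sketch below does only that arithmetic; the constant and function names are illustrative and are not taken from the study's own analysis.

```python
# Reported Spring 2012 figures from the text above.
N_EXISTING = 82          # existing articles in the sample
N_NEW = 42               # new articles in the sample
EXISTING_BEFORE = 11.26  # mean score of existing articles before student work
EXISTING_AFTER = 14.20   # mean score of existing articles after student work
NEW_AFTER = 13.55        # mean score of new articles after student work


def mean_improvement() -> float:
    """Weighted mean improvement across all sampled articles.

    Assumption: a new article starts from a score of 0, so its
    improvement equals its final score.
    """
    existing_gain = EXISTING_AFTER - EXISTING_BEFORE  # about 2.94 points per article
    new_gain = NEW_AFTER - 0.0                        # assumed starting score of 0
    total_gain = N_EXISTING * existing_gain + N_NEW * new_gain
    return total_gain / (N_EXISTING + N_NEW)


if __name__ == "__main__":
    print(f"{mean_improvement():.2f}")  # prints 6.53
```

The result (about 6.53) matches the "about 6.5 points" reported above, which supports reading the combined average this way.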

Existing articles in the sample improved by up to ten points. There were 7 cases of no substantial improvement (typically because the selected student did not get past the early stages of the Wikipedia assignment, or because the assignment was copyediting or other minor changes) and 8 cases in which article quality actually declined; the worst decline in the sample was 2 points. These made up a small minority of student articles: as mentioned earlier, 87.9% of the reviewed articles improved in quality.
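The outcome counts reported above are consistent with each other: 109 improved, 7 without substantial improvement, and 8 declined together account for all 124 sampled articles. A minimal Python check of that tally and of the 87.9% figure follows; it assumes the three categories are mutually exclusive, and the names are illustrative.

```python
IMPROVED = 109              # articles that improved after student work
NO_SUBSTANTIAL_CHANGE = 7   # articles with no substantial improvement
DECLINED = 8                # articles whose quality declined


def summarize(improved: int, unchanged: int, declined: int) -> tuple[int, float]:
    """Return the total article count and the percentage that improved.

    Assumes the three outcome categories do not overlap.
    """
    total = improved + unchanged + declined
    return total, 100.0 * improved / total


if __name__ == "__main__":
    total, pct = summarize(IMPROVED, NO_SUBSTANTIAL_CHANGE, DECLINED)
    print(total, f"{pct:.1f}%")  # prints 124 87.9%
```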

In a follow-up to gauge the level of "editor impact" (the effort experienced editors spent cleaning up and fixing problems in students' work), an invitation to an on-wiki survey was placed on the talk page of each of the 124 assessed articles after the courses ended. Only 13 of these drew responses. Six reported little or no cleanup required, a few reported around half an hour of work, one editor reported spending considerably longer than an hour trying to fix a student's work, and another pointed to major verifiability problems that would take longer than an hour to clean up. On the whole, the study did not find evidence of a severe editor burden. We continue to welcome ideas for a better way of measuring editor impact.

Article selection methodology

To get a representative sample of articles from across the education program, articles were selected as follows:

- For each participating class, two articles were selected if the class had 20 or fewer students, and three if 21 or more students.

- Using the Course Info tool, the alphabetically first and last students in each class (among those who made at least one mainspace edit) were chosen to represent the class. For classes with more than 20 students, a student in the approximate middle of the alphabetical list was selected to provide a third article.

- In cases where a student edited more than one article, LiAnna Davis used the student's contribution history to identify the main article that student worked on. (In many cases, students made minor edits to articles as part of their introduction to Wikipedia, before choosing a different article as the main focus of their Wikipedia assignment, or participated in peer editing of a classmate's article.)

- The "Pre" version of each article was identified as the last revision before students made their first edit to it, and the "Post" version as the last revision made during the class term by a student, professor, or Ambassador working with that class.

- Classes focused on making contributions to other Wikimedia projects (such as students who uploaded videos to Commons) were not included.
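The selection rules above can be expressed as a small Python sketch. It is not the tooling used for the study: class rosters came from the Course Info tool, and each student's main article was chosen by hand from contribution histories, a step this sketch does not model. The `Student` and `Revision` types, their fields, and both function names are hypothetical. The sketch also assumes that class size counts all enrolled students, and that timestamps and term boundaries are uniform ISO 8601 UTC strings, so string order equals chronological order.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Student:  # hypothetical roster entry
    username: str
    mainspace_edits: int


@dataclass
class Revision:  # hypothetical article revision
    rev_id: int
    user: str
    timestamp: str  # ISO 8601 UTC, e.g. "2012-02-01T00:00:00Z"


def select_representatives(enrolled: list[Student]) -> list[Student]:
    """Alphabetically first and last eligible students, plus a middle one for 21+."""
    eligible = sorted(
        (s for s in enrolled if s.mainspace_edits >= 1),  # at least one mainspace edit
        key=lambda s: s.username.lower(),
    )
    if not eligible:
        return []
    picks = [eligible[0], eligible[-1]]
    if len(enrolled) >= 21:  # assumption: class size counts all enrolled students
        picks.insert(1, eligible[len(eligible) // 2])  # approximate middle
    # Drop duplicates that arise when very few students are eligible.
    seen: set[str] = set()
    unique = []
    for s in picks:
        if s.username not in seen:
            seen.add(s.username)
            unique.append(s)
    return unique


def pre_post_revisions(
    history: list[Revision],
    students: set[str],
    staff: set[str],  # professor and Ambassadors working with the class
    term_start: str,
    term_end: str,
) -> tuple[Optional[Revision], Optional[Revision]]:
    """Pre: last revision before the first student edit. Post: last class edit in term."""
    history = sorted(history, key=lambda r: r.timestamp)
    in_term = [r for r in history if term_start <= r.timestamp <= term_end]
    student_edits = [r for r in in_term if r.user in students]
    if not student_edits:
        return None, None
    first_ts = student_edits[0].timestamp
    before = [r for r in history if r.timestamp < first_ts]
    pre = before[-1] if before else None  # None means the class created the article
    class_edits = [r for r in in_term if r.user in students or r.user in staff]
    return pre, class_edits[-1]
```

For a new article, `pre` is `None`, which corresponds to treating the article as starting from nothing.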

Note: The data analysis and production of graphs were done by Luis Campos, an external consultant. Luis is a data analyst and holds a Master's degree in Statistics from the University of California at Berkeley.

Note: While this set is a representative random sample of the articles that classes were editing, it cannot be directly extrapolated to estimate the total quality improvement for all articles. In some classes, students edited collaboratively, focusing on just a few articles as a group. In such cases, the two or three sampled articles for the class represent all or most of the work of the entire class. In other classes, each student worked on one (or more) articles, so the sampled articles are only a small fraction of the work students in that class did.

Original data sets

The Wikipedian reviews are available on these four pages:

- http://en.wiki.x.io/wiki/Wikipedia:Ambassadors/Research/Article_quality/Completed

- http://en.wiki.x.io/wiki/Wikipedia:Ambassadors/Research/Article_quality/Priority_2

- http://en.wiki.x.io/wiki/Wikipedia:Ambassadors/Research/Article_quality/Priority_3

- http://en.wiki.x.io/wiki/Wikipedia:Ambassadors/Research/Article_quality/Priority_4