Wikipedia:AfD stats don't measure what you think

This is an essay. It contains the advice or opinions of one or more Wikipedia contributors. This page is not an encyclopedia article, nor is it one of Wikipedia's policies or guidelines, as it has not been thoroughly vetted by the community. Some essays represent widespread norms; others only represent minority viewpoints.

Editors' Articles for Deletion stats are frequently misused as a measure of their contributions to WP:AFD, or even to Wikipedia overall. This essay identifies two flawed assumptions behind that misuse.

Context

The AfD statistics tool gives a summary statistic of how often an editor's !votes at Articles for Deletion match the outcome of the discussion. For an example, see this user's afdstats. This figure is often used to gauge how closely an editor's contributions align with those of other editors: the more often their !votes match the outcome, the more closely they are perceived to be aligned with the community. Even when this exact summary statistic is not used, editors commonly page through another editor's AfD history to check manually how often their !votes match the results. To avoid putting specific people under the microscope, this essay does not give examples of people using these stats in questionable ways. Such examples are easy to find, though, and not only in AfD disputes and general talk page discussions. They also appear in the highest-stakes evaluations of users, such as those at WP:AN/I and even WP:RfA.

This use of AfD stats has two major problems. First, not all editors !vote on the same AfDs, and some AfDs are fundamentally clearer than others. Second, following WP:CONSENSUS is understood as the primary method for improving Wikipedia, but AfD stats do not measure whether an editor followed consensus; they measure how successfully an editor predicted it. Moreover, the consensus that emerges at AfD is not always normatively good, and those who dissented along the way may often have been right. This essay elaborates on both problems.

Different AfDs are different

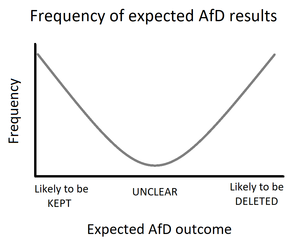

Imagine that, before a single !vote is cast, each AfD has some probability of ending with the page being deleted, and that these probabilities follow a distribution. It might look something like this (the exact shape is not important; this is just one example):

Articles on the left of this curve are extremely likely to be kept, and articles on the right of this curve are extremely likely to be deleted. But articles in the middle of the curve are more ambiguous. Maybe these are cases that are just on the borderline of passing GNG, or maybe they fall in a grey area where even the most experienced wikilawyers would come to different conclusions about whether or not some type of assumed notability applies. Even highly experienced editors might cast very different !votes on these pages.

Now imagine two experienced, thoughtful, conscientious volunteers, each with half an hour to spend !voting at AfD. The first likes to cast !votes that they are very comfortable with and very confident about. They scroll down the list of AfDs until they find a page that should obviously have one outcome or the other: a page at the far left or far right of the distribution. Even if that AfD already has three or four !votes in one direction, the editor carefully re-explains why policy shows that those !voters are correct. These AfDs nearly always resolve in the editor's favour, so their afdstats show excellent agreement with consensus.

The second editor is extremely careful to spend their half hour at AfD in ways that might actually affect outcomes. They scroll through the open AfDs until they find a page from the middle of the distribution, where it is anybody's guess how the discussion will turn out. They reach a carefully reasoned, policy-grounded position and cast a !vote that they know others may well disagree with. Much of the time they persuade other editors to !vote the same way, but often they end up arguing the losing position. Their AfD stats show a mixed bag.

But who spent their half hour more wisely? Informed !votes contribute to Wikipedia by shaping the population of pages: they should cause notable pages to remain in the encyclopedia and non-notable pages to be removed. An editor who picks discussions where they have a real chance of persuading others which way the AfD should go is changing which articles Wikipedia contains. Such an editor contributes more to the project than one who tells other editors what they already know, and whose !votes barely change which pages are kept and which are deleted.
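The two-editor thought experiment can be made concrete with a small simulation. The Python sketch below is purely illustrative. Several of its details are hypothetical assumptions, not data about real AfDs and not taken from the afdstats tool: the Beta(0.5, 0.5) distribution of deletion probabilities, the cut-offs for "clear-cut" (below 0.1 or above 0.9) and "borderline" (0.4 to 0.6) AfDs, and the function name `simulate_agreement`. Both editors are modelled as equally skilled: each always !votes for whichever outcome is more likely, and neither !vote changes the result.

```python
import random


def simulate_agreement(n_afds=10_000, seed=0):
    """Toy model of afdstats for two editors who differ only in which AfDs they pick.

    Hypothetical assumptions, for illustration only:
    - each AfD has a pre-!vote probability p of ending in deletion, drawn from a
      U-shaped Beta(0.5, 0.5) distribution (many clear cases, fewer borderline ones);
    - both editors !vote for whichever outcome is more likely, and their !votes
      do not change p.
    """
    rng = random.Random(seed)
    probs = [rng.betavariate(0.5, 0.5) for _ in range(n_afds)]

    def agreement(chosen):
        matches = 0
        for p in chosen:
            vote_delete = p > 0.5              # !vote for the likelier outcome
            deleted = rng.random() < p         # the AfD's actual result
            matches += vote_delete == deleted  # counts as a "match" in the stats
        return matches / len(chosen)

    # Editor A only joins clear-cut AfDs at either tail of the distribution.
    clear_cut = [p for p in probs if p < 0.1 or p > 0.9]
    # Editor B only joins borderline AfDs in the middle of the distribution.
    borderline = [p for p in probs if 0.4 <= p <= 0.6]
    return agreement(clear_cut), agreement(borderline)


editor_a, editor_b = simulate_agreement()
print(f"Editor A (clear-cut AfDs): {editor_a:.0%} agreement")
print(f"Editor B (borderline AfDs): {editor_b:.0%} agreement")
```

With these made-up parameters, Editor A's agreement comes out well above 90% and Editor B's sits near 55%. The two editors judge equally well, so the entire gap comes from which AfDs they chose. The model deliberately leaves out one thing: Editor B's !votes can change outcomes, and that is exactly the contribution afdstats cannot see.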

Predicting consensus can be bad

Following consensus is one of the core expectations of all Wikipedians. Wikipedia's basic policies describe consensus as the primary way the website achieves the WP:Five pillars. Actively violating consensus after it has been reached is disruptive editing, and someone who regularly breaks consensus is probably not an editor in good standing. It is therefore natural for editors assessing another editor's performance and contributions to look for a measure of how closely that editor falls in line with consensus. The crucial problem is that afdstats do not measure how closely an editor follows consensus; they measure how well the editor predicts it. A higher agreement percentage might be associated with shaping AfD outcomes. However, a correlation between an editor's !votes and the results cannot show that the editor caused that consensus, especially since there are usually many !votes per AfD. At AfD, editors are supposed to voice their opinions before a consensus has formed; that is how consensus gets built. There is no shame in having opposed a consensus before it was reached, so long as the editor does not disrupt the website by violating it afterwards.

Of course, AfDs are not an exercise in building consensus from scratch: as a consensus-building activity, AfD is tightly constrained by many years of detailed deletion policy. If a user has an extremely low agreement percentage (substantially below 50%), this might reflect a deep misunderstanding of deletion policy, or a willful disregard of it, that causes them to consistently !vote against the grain. But agreement percentage alone is not a good enough reason to doubt a user's good faith or competence. Someone might be willfully disruptive or dangerously ignorant of policy, or might simply be consistently !voting in extremely borderline AfDs. Telling these apart requires actually examining the content of their !votes, and has little to do with whether they tend to agree with the closing decision and rationale.

There is also an even more acute problem: at AfD, predicting consensus might not be a good thing at all. Academic publications on the AfD process have documented various biases, including a higher probability that pages about women are nominated and then deleted. Some of this research found the effect even after controlling for how notable the subject is (see, for example, the analysis by Tripodi and the literature cited in that paper). No editor should be proud of predicting an incorrect consensus, especially when we know that consensus will sometimes be biased or unfair.

The point

Not all consensuses are equally easy to predict, and highly productive styles of editing can actually lower the agreement between a user's !votes and AfD outcomes. Matching consensus before it is reached is also not necessarily a good thing, and editors should not aim for it. Once consensus has been reached, disobeying it is disruptive. On the way to consensus, however, editors should emphatically not cast !votes that are simply attempts to predict the discussion's outcome. A true consensus will only emerge if editors voice their actual opinions, and we can only improve the project by trying to make tomorrow's consensus at least as good as today's.