Portal:Computer programming

Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

-

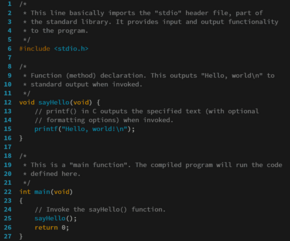

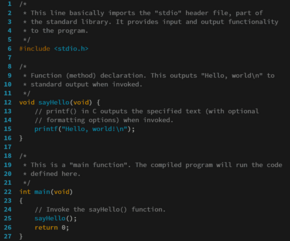

Image 1

The source code for a computer program in C. The gray lines are comments that explain the program to humans. When compiled and run, it will give the output "Hello, world!".

A programming language is a system of notation for writing computer programs.

Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language is required in order to execute programs, namely an interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program.

Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages—which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. While early programming languages were closely tied to the hardware, over time they have developed more abstraction to hide implementation details for greater simplicity.

Thousands of programming languages—often classified as imperative, functional, logic, or object-oriented—have been developed for a wide variety of uses. Many aspects of programming language design involve tradeoffs—for example, exception handling simplifies error handling, but at a performance cost. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages. (Full article...) -

Image 2Prolog is a logic programming language that has its origins in artificial intelligence, automated theorem proving and computational linguistics.

Prolog has its roots in first-order logic, a formal logic, and unlike many other programming languages, Prolog is intended primarily as a declarative programming language: the program is a set of facts and rules, which define relations. A computation is initiated by running a query over the program.

Prolog was one of the first logic programming languages and remains the most popular such language today, with several free and commercial implementations available. The language has been used for theorem proving, expert systems, term rewriting, type systems, and automated planning, as well as its original intended field of use, natural language processing. (Full article...) -

Image 3

In computer programming, assembly language (alternatively assembler language or symbolic machine code), often referred to simply as assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture's machine code instructions. Assembly language usually has one statement per machine instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported.

The first assembly code in which a language is used to represent machine code instructions is found in Kathleen and Andrew Donald Booth's 1947 work, Coding for A.R.C.. Assembly code is converted into executable machine code by a utility program referred to as an assembler. The term "assembler" is generally attributed to Wilkes, Wheeler and Gill in their 1951 book The Preparation of Programs for an Electronic Digital Computer, who, however, used the term to mean "a program that assembles another program consisting of several sections into a single program". The conversion process is referred to as assembly, as in assembling the source code. The computational step when an assembler is processing a program is called assembly time.

Because assembly depends on the machine code instructions, each assembly language is specific to a particular computer architecture. (Full article...) -

Image 4Daguerreotype by Antoine Claudet (c. 1843)

Augusta Ada King, Countess of Lovelace (née Byron; 10 December 1815 – 27 November 1852), also known as Ada Lovelace, was an English mathematician and writer chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognise that the machine had applications beyond pure calculation.

Lovelace was the only legitimate child of poet Lord Byron and reformer Anne Isabella Milbanke. All her half-siblings, Lord Byron's other children, were born out of wedlock to other women. Lord Byron separated from his wife a month after Ada was born and left England forever. He died in Greece when she was eight. Lady Byron was anxious about her daughter's upbringing and promoted Lovelace's interest in mathematics and logic in an effort to prevent her from developing her father's perceived insanity. Despite this, Lovelace remained interested in her father, naming her two sons Byron and Gordon. Upon her death, she was buried next to her father at her request. Although often ill in her childhood, Lovelace pursued her studies assiduously. She married William King in 1835. King was made Earl of Lovelace in 1838, Ada thereby becoming Countess of Lovelace.

Lovelace's educational and social exploits brought her into contact with scientists such as Andrew Crosse, Charles Babbage, Sir David Brewster, Charles Wheatstone and Michael Faraday, and the author Charles Dickens, contacts which she used to further her education. Lovelace described her approach as "poetical science" and herself as an "Analyst (& Metaphysician)". (Full article...) -

Image 5

Rust is a general-purpose programming language emphasizing performance, type safety, and concurrency. It enforces memory safety, meaning that all references point to valid memory. It does so without a traditional garbage collector; instead, memory safety errors and data races are prevented by the "borrow checker", which tracks the object lifetime of references at compile time.

Rust does not enforce a programming paradigm, but was influenced by ideas from functional programming, including immutability, higher-order functions, algebraic data types, and pattern matching. It also supports object-oriented programming via structs, enums, traits, and methods. It is popular for systems programming.

Software developer Graydon Hoare created Rust as a personal project while working at Mozilla Research in 2006. Mozilla officially sponsored the project in 2009. In the years following the first stable release in May 2015, Rust was adopted by companies including Amazon, Discord, Dropbox, Google (Alphabet), Meta, and Microsoft. In December 2022, it became the first language other than C and assembly to be supported in the development of the Linux kernel. (Full article...) -

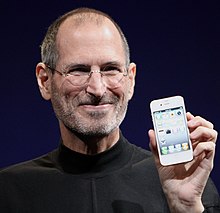

Image 6Jobs introducing the iPhone 4 in 2010

Steven Paul Jobs (1955 – 2011) was an American businessman, inventor, and investor best known for co-founding the technology company Apple Inc. Jobs was also the founder of NeXT and chairman and majority shareholder of Pixar. He was a pioneer of the personal computer revolution of the 1970s and 1980s, along with his early business partner and fellow Apple co-founder Steve Wozniak.

Jobs was born in San Francisco in 1955 and adopted shortly afterwards. He attended Reed College in 1972 before withdrawing that same year. In 1974, he traveled through India, seeking enlightenment before later studying Zen Buddhism. He and Wozniak co-founded Apple in 1976 to further develop and sell Wozniak's Apple I personal computer. Together, the duo gained fame and wealth a year later with production and sale of the Apple II, one of the first highly successful mass-produced microcomputers.

Jobs saw the commercial potential of the Xerox Alto in 1979, which was mouse-driven and had a graphical user interface (GUI). This led to the development of the largely unsuccessful Apple Lisa in 1983, followed by the breakthrough Macintosh in 1984, the first mass-produced computer with a GUI. The Macintosh launched the desktop publishing industry in 1985 (for example, the Aldus Pagemaker) with the addition of the Apple LaserWriter, the first laser printer to feature vector graphics and PostScript. (Full article...) -

Image 7In C++ computer programming, allocators are a component of the C++ Standard Library. The standard library provides several data structures, such as list and set, commonly referred to as containers. A common trait among these containers is their ability to change size during the execution of the program. To achieve this, some form of dynamic memory allocation is usually required. Allocators handle all the requests for allocation and deallocation of memory for a given container. The C++ Standard Library provides general-purpose allocators that are used by default, however, custom allocators may also be supplied by the programmer.

Allocators were invented by Alexander Stepanov as part of the Standard Template Library (STL). They were originally intended as a means to make the library more flexible and independent of the underlying memory model, allowing programmers to utilize custom pointer and reference types with the library. However, in the process of adopting STL into the C++ standard, the C++ standardization committee realized that a complete abstraction of the memory model would incur unacceptable performance penalties. To remedy this, the requirements of allocators were made more restrictive. As a result, the level of customization provided by allocators is more limited than was originally envisioned by Stepanov.

Nevertheless, there are many scenarios where customized allocators are desirable. Some of the most common reasons for writing custom allocators include improving performance of allocations by using memory pools, and encapsulating access to different types of memory, like shared memory or garbage-collected memory. In particular, programs with many frequent allocations of small amounts of memory may benefit greatly from specialized allocators, both in terms of running time and memory footprint. (Full article...) -

Image 8

Logo since August 17, 2012

Microsoft is a multinational computer technology corporation. Microsoft was founded on April 4, 1975, by Bill Gates and Paul Allen in Albuquerque, New Mexico. Its current best-selling products are the Microsoft Windows operating system; Microsoft Office, a suite of productivity software; Xbox, a line of entertainment of games, music, and video; Bing, a line of search engines; and Microsoft Azure, a cloud services platform.

In 1980, Microsoft formed a partnership with IBM to bundle Microsoft's operating system with IBM computers; with that deal, IBM paid Microsoft a royalty for every sale. In 1985, IBM requested Microsoft to develop a new operating system for their computers called OS/2. Microsoft produced that operating system, but also continued to sell their own alternative, which proved to be in direct competition with OS/2. Microsoft Windows eventually overshadowed OS/2 in terms of sales. When Microsoft launched several versions of Microsoft Windows in the 1990s, they had captured over 90% market share of the world's personal computers.

As of June 30, 2015, Microsoft has a global annual revenue of US$86.83 billion (~$109 billion in 2023) and 128,076 employees worldwide. It develops, manufactures, licenses, and supports a wide range of software products for computing devices. (Full article...) -

Image 9Programming languages are used for controlling the behavior of a machine (often a computer). Like natural languages, programming languages follow rules for syntax and semantics.

There are thousands of programming languages and new ones are created every year. Few languages ever become sufficiently popular that they are used by more than a few people, but professional programmers may use dozens of languages in a career.

Most programming languages are not standardized by an international (or national) standard, even widely used ones, such as Perl or Standard ML (despite the name). Notable standardized programming languages include ALGOL, C, C++, JavaScript (under the name ECMAScript), Smalltalk, Prolog, Common Lisp, Scheme (IEEE standard), ISLISP, Ada, Fortran, COBOL, SQL, and XQuery. (Full article...) -

Image 10

A Blender screenshot displaying the 3D test model Suzanne

Computer graphics deals with generating images and art with the aid of computers. Computer graphics is a core technology in digital photography, film, video games, digital art, cell phone and computer displays, and many specialized applications. A great deal of specialized hardware and software has been developed, with the displays of most devices being driven by computer graphics hardware. It is a vast and recently developed area of computer science. The phrase was coined in 1960 by computer graphics researchers Verne Hudson and William Fetter of Boeing. It is often abbreviated as CG, or typically in the context of film as computer generated imagery (CGI). The non-artistic aspects of computer graphics are the subject of computer science research.

Some topics in computer graphics include user interface design, sprite graphics, rendering, ray tracing, geometry processing, computer animation, vector graphics, 3D modeling, shaders, GPU design, implicit surfaces, visualization, scientific computing, image processing, computational photography, scientific visualization, computational geometry and computer vision, among others. The overall methodology depends heavily on the underlying sciences of geometry, optics, physics, and perception.

Computer graphics is responsible for displaying art and image data effectively and meaningfully to the consumer. It is also used for processing image data received from the physical world, such as photo and video content. Computer graphics development has had a significant impact on many types of media and has revolutionized animation, movies, advertising, and video games, in general. (Full article...) -

Image 11

Lisp (historically LISP, an abbreviation of "list processing") is a family of programming languages with a long history and a distinctive, fully parenthesized prefix notation.

Originally specified in the late 1950s, it is the second-oldest high-level programming language still in common use, after Fortran. Lisp has changed since its early days, and many dialects have existed over its history. Today, the best-known general-purpose Lisp dialects are Common Lisp, Scheme, Racket, and Clojure.

Lisp was originally created as a practical mathematical notation for computer programs, influenced by (though not originally derived from) the notation of Alonzo Church's lambda calculus. It quickly became a favored programming language for artificial intelligence (AI) research. As one of the earliest programming languages, Lisp pioneered many ideas in computer science, including tree data structures, automatic storage management, dynamic typing, conditionals, higher-order functions, recursion, the self-hosting compiler, and the read–eval–print loop.

The name LISP derives from "LISt Processor". Linked lists are one of Lisp's major data structures, and Lisp source code is made of lists. Thus, Lisp programs can manipulate source code as a data structure, giving rise to the macro systems that allow programmers to create new syntax or new domain-specific languages embedded in Lisp. (Full article...) -

Image 12

Tcl (pronounced "tickle" or as an initialism) is a high-level, general-purpose, interpreted, dynamic programming language. It was designed with the goal of being very simple but powerful. Tcl casts everything into the mold of a command, even programming constructs like variable assignment and procedure definition. Tcl supports multiple programming paradigms, including object-oriented, imperative, functional, and procedural styles.

It is commonly used embedded into C applications, for rapid prototyping, scripted applications, GUIs, and testing. Tcl interpreters are available for many operating systems, allowing Tcl code to run on a wide variety of systems. Because Tcl is a very compact language, it is used on embedded systems platforms, both in its full form and in several other small-footprint versions.

The popular combination of Tcl with the Tk extension is referred to as Tcl/Tk (pronounced "tickle teak" or as an initialism) and enables building a graphical user interface (GUI) natively in Tcl. Tcl/Tk is included in the standard Python installation in the form of Tkinter. (Full article...) -

Image 13

Haskell (/ˈhæskəl/) is a general-purpose, statically-typed, purely functional programming language with type inference and lazy evaluation. Designed for teaching, research, and industrial applications, Haskell has pioneered several programming language features such as type classes, which enable type-safe operator overloading, and monadic input/output (IO). It is named after logician Haskell Curry. Haskell's main implementation is the Glasgow Haskell Compiler (GHC).

Haskell's semantics are historically based on those of the Miranda programming language, which served to focus the efforts of the initial Haskell working group. The last formal specification of the language was made in July 2010, while the development of GHC continues to expand Haskell via language extensions.

Haskell is used in academia and industry. As of May 2021[update], Haskell was the 28th most popular programming language by Google searches for tutorials, and made up less than 1% of active users on the GitHub source code repository. (Full article...) -

Image 14

Stephen Gary Wozniak (/ˈwɒzniæk/; born August 11, 1950), also known by his nickname Woz, is an American technology entrepreneur, electrical engineer, computer programmer, philanthropist, and inventor. In 1976, he co-founded Apple Computer with his early business partner Steve Jobs. Through his work at Apple in the 1970s and 1980s, he is widely recognized as one of the most prominent pioneers of the personal computer revolution.

In 1975, Wozniak started developing the Apple I into the computer that launched Apple when he and Jobs first began marketing it the following year. He was the primary designer of the Apple II, introduced in 1977, known as one of the first highly successful mass-produced microcomputers, while Jobs oversaw the development of its foam-molded plastic case and early Apple employee Rod Holt developed its switching power supply.

With human–computer interface expert Jef Raskin, Wozniak had a major influence over the initial development of the original Macintosh concepts from 1979 to 1981, when Jobs took over the project following Wozniak's brief departure from the company due to a traumatic airplane accident. After permanently leaving Apple in 1985, Wozniak founded CL 9 and created the first programmable universal remote, released in 1987. He then pursued several other businesses and philanthropic ventures throughout his career, focusing largely on technology in K–12 schools. (Full article...) -

Image 15Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. Such machines may be called AIs.

High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."

Various subfields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception, and support for robotics. General intelligence—the ability to complete any task performed by a human on an at least equal level—is among the field's long-term goals. To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, operations research, and economics. AI also draws upon psychology, linguistics, philosophy, neuroscience, and other fields. (Full article...)

Selected images

-

Image 1Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

-

Image 2An IBM Port-A-Punch punched card

-

Image 3This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

-

Image 4Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

-

Image 5GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

-

Image 6Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

-

Image 7A lone house. An image made using Blender 3D.

-

Image 8Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

-

Image 10Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

-

Image 11Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

-

Image 13Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

-

Image 16Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

-

Image 17A head crash on a modern hard disk drive

-

Image 18A view of the GNU nano Text editor version 6.0

Did you know? - load more entries

- ... that Brazilian computer science researcher and internet pioneer Tadao Takahashi negotiated with drug lords to install internet equipment in his country?

- ... that Rust has been named the "most loved programming language" every year for seven years since 2016 by annual surveys conducted by Stack Overflow?

- ... that the Gale–Shapley algorithm was used to assign medical students to residencies long before its publication by Gale and Shapley?

- ... that the software-testing framework pytest has been described as a key ecosystem project for the Python programming language?

- ... that the study of selection algorithms has been traced to an 1883 work of Lewis Carroll on how to award second place in single-elimination tournaments?

- ... that Makoto Soejima is the only International Mathematical Olympiad gold medalist with a perfect score to have won both the Google Code Jam and the Facebook Hacker Cup?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

No recent news

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

-

Commons

Free media repository -

Wikibooks

Free textbooks and manuals -

Wikidata

Free knowledge base -

Wikinews

Free-content news -

Wikiquote

Collection of quotations -

Wikisource

Free-content library -

Wikiversity

Free learning tools -

Wiktionary

Dictionary and thesaurus