Wikipedia:Village pump (proposals)/Archive 145

This page contains discussions that have been archived from Village pump (proposals). Please do not edit the contents of this page. If you wish to revive any of these discussions, either start a new thread or use the talk page associated with that topic.

RfC: Populating article descriptions magic word

The following discussion is closed. Please do not modify it. Subsequent comments should be made on the appropriate discussion page. No further edits should be made to this discussion.

In late March to early April 2017, Wikipedia:Village pump (proposals)/Archive 138#Rfc: Remove description taken from Wikidata from mobile view of en-WP ended with the WMF declaring[1] "we have decided to turn the wikidata descriptions feature off for enwiki for the time being."

In September 2017, it was found that, through misunderstanding or miscommunication, this feature had been turned off for only one subset of cases and remained on at enwiki for others (in some apps, search results, ...). The effect is that, e.g., for 2 hours this week, everyone who searched for Henry VIII of England, or saw it through those apps or in "related pages" or some such, got the description "obey hitler"[2] (no idea how many people actually saw this; this Good Article is viewed some 13,000 times a day and is indefinitely semi-protected here to protect against such vandalism).

The discussion about this started in Wikipedia:Village pump (policy)/Archive 137#Wikidata descriptions still used on enwiki and continued mainly on Wikipedia talk:Wikidata/2017 State of affairs (you can find the discussions in Archive 5 up to Archive 12!). In the end, the WMF agreed to create a new magic word (name to be decided), to be implemented if all goes well near the end of February 2018, which will replace the use of the Wikidata descriptions on enwiki in all cases.

We now need to decide two things. Fram (talk) 09:58, 8 December 2017 (UTC)

How will we populate the magic word with local descriptions?

- Initially, copy the Wikidata descriptions by bot

- With a bot, use a stripped version of the first sentence of the article (the method described by User:David Eppstein and User:Alsee in Wikipedia talk:Wikidata/2017 State of affairs/Archive 5#Wikipedia descriptions vs Wikidata descriptions)

- With a bot, use information from the infobox (e.g. for people a country + occupation combination: "American singer", "Nepali politician", ...)

- Start with blanks and fill in manually (for all articles, or just for BLPs)

- Start with blanks, allowing descriptions to be filled in manually and/or by bot (bot-filling after successful bot approval per usual procedures)

- Other

Discussion on initial population

- #5 – allows bot operations for larger or smaller sets of articles per criteria that don't have to be decided all at once, and manual overrides at all times. --Francis Schonken (talk) 10:28, 8 December 2017 (UTC)

- #5 is my preference following the reasoning below:

- Option 1, copying from Wikidata, will populate Wikipedia with a lot of really bad descriptions, which will remain until someone gets around to fixing them. My initial rough estimates are that there are more bad/nonexistent Wikidata descriptions than good ones. I strongly oppose this option unless and until someone comes up with solid data indicating that it will be a net gain.

- Option 2, extracting a useful description from the first sentence or paragraph, seems a nice idea at first glance, but how will it be done? Does anyone promoting this option have a good idea of how effective it would be, how long it would take, and whether it would on average produce better descriptions than option 1? This option should be considered unsuitable until some evidence is provided that it is reasonably practicable and will do more good than harm.

- Option 3, copying from the infobox, may work for some of the articles that actually have an infobox with a useful short description, or components that can be assembled into a useful short description. This may work for a useful subset of articles, but it is not yet known how many. I would guess well under half, so it is not a good primary option.

- Option 4, start with blanks and fill in manually, is probably the only thing that can be done for a large proportion of articles, my guess being on the order of half. It will have to be done, and is probably the de facto default. It is easy, quick and will do no harm. It is totally compatible with option 5, for which it is the first step.

- Option 5 is starting with option 4 and applying ad hoc local solutions which can be shown to be useful. Any harm is localised, Wikidata descriptions can be used when they are appropriate, extracts from leads can be used when appropriate, mashups from infoboxes can be used when appropriate, and manual input from people who actually know what the article is about can be used when appropriate. I think there is no better, simpler, and more practical option than this, and suggest that projects should consider how to deal with their articles. WPSCUBA already has manually entered short descriptions ready for use for more than half of its articles, which I provided as an experiment. It is fairly time consuming, but gets easier with practice. Some editors may find that this is a fun project, others will not, and there will inevitably be conflicts, which I suggest should be managed by BRD as simple content disagreements, to be discussed on talk pages and finalised by consensus. In effect, option 5 is the wiki way. It is simple and flexible, and likely to produce the best results with the least amount of damage. · · · Peter (Southwood) (talk): 11:26, 8 December 2017 (UTC)

- (There was an edit conflict here and I chose to group all my comments together · · · Peter (Southwood) (talk): 11:26, 8 December 2017 (UTC))

- #5. Whether a Wikidata description is suitable or not is very different across many groups of articles. It should be decided (and possibly bot populated) per group, sometimes per small group, and for that we need to start from blank descriptions.--Ymblanter (talk) 11:23, 8 December 2017 (UTC)

- Start with not using it anywhere, only use it as override per situation. —TheDJ (talk • contribs) 12:09, 8 December 2017 (UTC)

- TheDJ, to clarify, is this an Option 6: Other that you are proposing here? i.e. Only add the magic word to articles where the Wikidata short description is unsuitable, and use Wikidata description as default in all cases until someone finds a problem and adds a magic word, after which the short description will be taken from the magic word? If this is the case, what is your opinion on reverting to Wikidata description for any reason at a later date? · · · Peter (Southwood) (talk): 14:53, 8 December 2017 (UTC)

- @Pbsouthwood: Correct. I have no opinions on reverting at a later moment. —TheDJ (talk • contribs) 13:42, 11 December 2017 (UTC)

- #5. That doesn't deal with all the issues, but it comes closest to my views, given the choices. See also my comments at WT:Wikidata/2017 State of affairs/Archive 12 and WT:Wikidata/2017 State of affairs (now archived to WT:Wikidata/2017 State of affairs/Archive 13). - Dank (push to talk) 14:06, 8 December 2017 (UTC)

- Peter asked me for clarification. If people have specific questions, or if they want a summary of my previous posts, I'll do my best to answer. - Dank (push to talk) 15:30, 8 December 2017 (UTC)

- To whoever closes this: the discussion started here seems to be continuing at a new RfC at Usage of Wikidata links. - Dank (push to talk) 15:18, 16 January 2018 (UTC)

- #5 but don't wait too long to fill in where possible. Fram (talk) 14:14, 8 December 2017 (UTC)

- Fram, are you recommending a massive short-term drive to produce short descriptions to make the system useful? · · · Peter (Southwood) (talk): 15:09, 8 December 2017 (UTC)

- Yes, although this isn't in my view necessary to proceed with this, only preferable. No descriptions is better than the current situation, but decent enwiki-based descriptions are in many cases better than no descriptions. No need to throw out the baby (descriptions to be shown in search and so on) with the bathwater. Fram (talk) 15:21, 8 December 2017 (UTC)

- #6 - don't use it. There has been no consensus to have this magic word in the first place - that is the question that should have been asked in this RfC (see discussion here). I personally think it is a bad idea and a waste of developer time. It's better to focus on improving the descriptions on Wikidata instead. Mike Peel (talk) 15:27, 8 December 2017 (UTC)

- #6 — Find a solution that monitors and updates Wikidata descriptions — If a description is good enough for Wikipedia(ns), it should be on Wikidata. If vandalism is blocked on Wikipedia, it should be simultaneously reverted on Wikidata. Wikidata is the hub for interwiki links and a storage site for both descriptions and structured data that then are harvested by external knowledge-based search engines (think Siri, Alexa, and Google's Knowledge Graph). For interwiki purposes, we should want to ensure that short descriptions at Wikidata are accurate, facilitating other language Wikipedias when they interlink to en.wiki. For external harvesting, we should want to prevent vandalism from being propagated. The problems regarding vandalism and sourcing on Wikidata are real, but the solution is for Wikipedians and our anti-vandalism bots to be able to easily monitor and edit the relevant Wikidata material. Possible solutions would include: (a) Implementing a pending changes-like functionality for changes to descriptions on high-traffic or contentious pages; (b) Make changes to short descriptions prominently visible on Wikipedia watchlists, inside the VisualEditor, and as a preference option for Wikipedia editors; (c) Develop and implement in-Wikipedia editing of Wikidata short descriptions using some kind of click-on-this-pencil tool.--Carwil (talk) 15:46, 8 December 2017 (UTC)

- After those solutions are implemented, you are free to ask for an rfC to overturn the consensus of the previous RfC which decided not to have these descriptions. This RfC is a discussion to get solutions which give you what you want on enwiki (descriptions in VE, mobile, ...) without interfering in what Wikidata does (they are free to have their own descriptions or to import ours). Fram (talk) 16:01, 8 December 2017 (UTC)

- Carwil, how do you propose that Wikipedia controls access by vandals to Wikidata? Are you suggesting that Wikipedia admins should be able to protect Wikidata items and block Wikidata users?

- The easy options are… "undo" functionality for Wikidata descriptions in Wikipedia watchlists, and option (a) I proposed above, something like pending-changes that protects pages on Wikipedia from unreviewed changes from Wikidata. Transferring anti-vandalism bots from Wikipedia to Wikidata would also be helpful.--Carwil (talk) 16:47, 8 December 2017 (UTC)

- Cluebot already runs on Wikidata. ChristianKl (talk) 15:19, 17 December 2017 (UTC)

- Strongly oppose 4 and strongly oppose 5—Let's reject any solution that mass-blanks short descriptions: these are a functional part of mobile browsing and of the VisualEditor. As an editor and a teacher who brings students into editing Wikipedia, the latter functionality is a crucial timesaver. Wikipedia is increasingly accessed on mobile devices, and short descriptions prevent clicking through to a page only to find it's not the one you are looking for.--Carwil (talk) 15:46, 8 December 2017 (UTC)

- Note, this is a double vote, Carwil already expressed a bolded "support" above... Fram (talk) 10:11, 8 January 2018 (UTC)

- Have you analysed the overall usefulness of Wikidata descriptions and found that there are more good descriptions than bad, or found a way to find all the bad ones so they can be changed to good? If so please point to your methods and results, as they would be extremely valuable. What methods have you used to indicate the comparative harm done by bad descriptions versus the good done by good descriptions? · · · Peter (Southwood) (talk): 16:14, 8 December 2017 (UTC)

- Yes, I have analyzed a sample here. I found that 13 of 30 had adequate descriptions (though 7 of them could be improved), 13 had no descriptions at all, 1 was incorrectly described (not vandalism), 2 were redundant with the article title (i.e., they should be overridden with a blank), and 1 represented a case where the Wikipedia article and the Wikidata entity were not identical and shouldn't share the same description. The redundant descriptions would cause no harm. Mislabelling "Administrative divisions of Bolivia" with the subheading "administrative territorial entity of Bolivia" would cause mild confusion. The legibility provided by descriptions easily outweighs the harms. (The only compelling harm is due to vandalism, which should be addressed by improving vandalism tools, not by forking the descriptions between the projects.)--Carwil (talk) 16:47, 8 December 2017 (UTC)

- Options 4 and 5 are not to blank anything; they are to put short descriptions, which are text content, into the article they describe, where they can be properly (or at least better) maintained by people who may actually know what the article is about. Wikidata can use them if their terms of use allow, and if they are actually better for Wikidata's purposes, which is by no means clear at present. · · · Peter (Southwood) (talk): 16:14, 8 December 2017 (UTC)

- Options 4 and 5 involve starting with blanks everywhere. The whole proposal assumes that we should fork a dataset describing Wikipedia articles into two independently editable versions. Forking a dataset always creates inconsistencies and reduces the visibility of problems by splitting the number of eyes to watch for problems. Better to make Wikipedians' eyes more powerful at spotting problems (which are unusual) than to throw up a wall between the two projects. My sample suggests that 90% of the time, or more, the two projects are working towards the same goal here.--Carwil (talk) 16:47, 8 December 2017 (UTC)

- They do, but it is unlikely the WMF will switch until the Wikipedia results are no worse than the Wikidata results, though I have no idea how they would measure that, since they don't seem to have much idea of the quality they will be comparing against, or if they do, are not keen on sharing it.

- The dataset does not suit Wikipedia. We should not be forced to use it. A dataset that suits Wikipedia may not suit Wikidata. Should we force it on them? Two datasets means Wikipedia can look after their own, and Wikidata can use what they find useful from it, and Wikipedians are not coerced into editing a project they did not sign up for. Using shitty quality data on Wikipedia to exert pressure on Wikipedians to edit Wikidata may have a backlash that will harm either or both projects, not a risk I would be willing to take, if it could affect my employment, unless of course I was being paid to damage the WMF, but that would be conspiracy theory, and frankly I think it unlikely.

- I also did a bit of a survey; my results do not agree with yours, and they are also from such a small sample as to be statistically unreliable. I also wrote short descriptions for about 600 articles in WPSCUBA, but did not keep records. Most (more than half) articles needed a new description, as the Wikidata one either did not exist or was inappropriate. There were some which were perfectly adequate, but less than half of the ones that actually existed, from memory. It would be possible to go back and count, but I think it would be a better use of my time to do new ones, if anyone is willing to join such a project. Maybe Wikiproject Medicine, or Biography, where quality actually may have real life consequences, but I don't usually work much in those fields and hesitate to move into them without some project participation. I have already run into occasional unfriendly reactions where projects overlap, but fortunately very few. · · · Peter (Southwood) (talk): 18:18, 8 December 2017 (UTC)

- @Pbsouthwood: I don't think there's much daylight between Wikipedia's purpose for these descriptions (which hasn't been written yet), the value of them for the mobile app, the value of them for the VisualEditor (as disambiguators for making links), and the value for Wikidata as discussed here. There, the requirements include: "a short phrase designed to disambiguate items with the same or similar labels"; avoiding POV, bias, promotion, and controversial claims; and avoiding "information that is likely to change." Only the last one seems likely to differ from the ideal Wikipedia description and only marginally: e.g., "current president of the United States" would have to be replaced with "45th president of the United States."--Carwil (talk) 22:11, 12 December 2017 (UTC)

- There have been extensive discussions between community and WMF on the description issue. I wish this RFC had gone through a draft stage before posting. There may be other options or issues that may need to be sorted out, potentially affecting the outcome here. A followup RFC might be needed.

The previous RFC[3] consensus was clearly to eliminate wikidata-descriptions, and that is definitely my position. An alternate option would be to skip creating a description-keyword at all, and just take the description from the lead sentence. That has the benefits of (1) ensuring all articles automatically have descriptions, (2) avoiding any work to create and maintain descriptions, and (3) avoiding a new, independent issue of description-vandalism. The downside is that the lead sentence doesn't always make for a great short description.

If we go with a new description keyword, #5, #2 and #1 are all reasonable. (#3 and #4 are basically redundant to bot approval in #5.) However, as I note in the question below, #5 can be implemented with a temporary wikidata-default. This gives us time to start filling in local-descriptions before the wikidata-descriptions are shut off. This would avoid abruptly blanking descriptions. Alsee (talk) 21:49, 8 December 2017 (UTC)

- #2, with #5 as a second preference. The autogenerated descriptions look like they're good enough for most purposes. Sandstein 16:14, 10 December 2017 (UTC)

- Sandstein, how big was your test sample, and how were the examples chosen? · · · Peter (Southwood) (talk): 16:36, 10 December 2017 (UTC)

- 5. Mass-importing WD content defeats the purpose of getting rid of WD descriptions. James (talk/contribs) 16:30, 11 December 2017 (UTC)

- Only true for a limited period until someone gets round to changing them where necessary. If the problem is big enough, there will be bot runs to do fixes, so over a medium term it does not make much difference as once the descriptions are in Wikipedia we can fix them as fast as we can make arrangements to do so and will no longer be handicapped by WP:ICANTHEARTHAT obfuscations from WMF. The important part is to get them where we have the control so we can start work getting them right. · · · Peter (Southwood) (talk): 07:47, 13 December 2017 (UTC)

- 5 Basically what Peter said. In some areas, the wikidata descriptions will be good. In others the first sentence stripping will be good. In some data from infoboxes can be used. Etcetera. Galobtter (pingó mió) 16:20, 19 December 2017 (UTC)

- 5 - Having just read the discussions on this, I'm absolutely astounded that so much vandalism has taken place. Anyway, back on point: Wikidata is beyond useless when it comes to dealing with vandalism, and as such 5 is the best way of dealing with it! –Davey2010 Merry Xmas / Happy New Year 23:24, 27 December 2017 (UTC)

- Combination of 1 and 5. Important but keep hidden until reviewed on WP. Doc James (talk · contribs · email) 06:19, 30 December 2017 (UTC)

- #6 Retain Wikidata descriptions and bypass only those not needed. Eventually all Wikipedias will have to use Wikidata. Moving back and forth makes little sense. The only thing we could do is possibly add functions to update Wikidata directly and retain functionality to bypass the magic word locally. -- Magioladitis (talk) 15:56, 30 December 2017 (UTC)

- Circular reasoning. "Use Wikidata because we will have to use Wikidata"? Fram (talk) 10:11, 8 January 2018 (UTC)

- 5 - For reasons stated by others above. Tony Tan · talk 04:17, 17 January 2018 (UTC)

- 5 - Per above. (Edit just to clarify, at the point I edited this subsection, Fish had not closed the discussion - as they closed the entire thread, there was no conflict and my !vote does not affect outcome anyway) Only in death does duty end (talk) 13:02, 6 February 2018 (UTC)

What to do with blanks

What should we do when there is no magic word, or the magic word has no value?

- Show the Wikidata description instead

- Show no description

- Show no description for a predefined list of cases (lists, disambiguation pages, ...) and the Wikidata one otherwise (this is the solution advocated by User:DannyH (WMF) at the moment)

- Other

- A transition from #1 to #2. In the initial stage, any article that lacks a local description will continue to draw a description from Wikidata. We deploy the new description keyword and start filling in local descriptions which override Wikidata descriptions. Once we have built a sufficient base of local descriptions, we finalize the transition by switching-off Wikidata descriptions completely. (Note: Added 16:34, 6 January 2018 (UTC). Previous discussion participants have been pinged to discuss this new option in subsection Filling in blanks: option #5.)
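As a rough illustration of how the options above differ, here is a minimal Python sketch of the display rule each one implies. The function name, the policy names and the set of suppressed page types are hypothetical and are not the WMF's implementation; the page types are taken from the examples given later in this discussion. As in the question above, a missing magic word and an empty one are treated alike.

```python
from enum import Enum
from typing import Optional


class BlankPolicy(Enum):
    WIKIDATA_FALLBACK = 1   # option 1: show the Wikidata description instead
    NO_DESCRIPTION = 2      # option 2: show no description
    LIST_THEN_WIKIDATA = 3  # option 3: blank for listed page types, Wikidata otherwise


# Hypothetical list of page types whose Wikidata text describes the page itself.
SUPPRESSED_PAGE_TYPES = {"category", "disambiguation", "list", "main page"}


def displayed_description(local: Optional[str], wikidata: Optional[str],
                          page_type: str, policy: BlankPolicy) -> Optional[str]:
    """Return the short description to show, or None to show nothing."""
    # A non-empty local (magic word) description always wins under every option.
    if local:
        return local
    # From here on the magic word is missing or empty.
    if policy is BlankPolicy.NO_DESCRIPTION:
        return None
    if policy is BlankPolicy.LIST_THEN_WIKIDATA and page_type in SUPPRESSED_PAGE_TYPES:
        return None
    # Fall back to Wikidata, treating an empty Wikidata description as none.
    return wikidata or None
```

Under this sketch, option 5 amounts to running with `WIKIDATA_FALLBACK` while local descriptions are filled in, then switching the policy to `NO_DESCRIPTION`.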

Discussion on blanks

- #2 – comes closest to having no description per initial aborted RfC; those who want them can write them, or fill in automatically (per usual bot approval procedures). --Francis Schonken (talk) 10:28, 8 December 2017 (UTC)

- No reasonable variant/alternative/compromise has been proposed since I supported #2 a month ago. Please replace DannyH as an intermediary (not the first time I suggest this): they have been pretty clear about their inability to propose anything tangible. The well-being of the Wikipedia project should not be left in the hands of those who are paid to improve the project but can deliver next to nothing. --Francis Schonken (talk) 10:37, 8 January 2018 (UTC)

- #5 as a reasonable compromise per various discussions below. · · · Peter (Southwood) (talk): 18:24, 6 January 2018 (UTC)

- #2 The Wikidata description should not be allowed as a default where there is no useful purpose to be served by a short description. An empty parameter to the magic word must be respected as a Wikipedia editorial decision that no short description is wanted. This decision can always be discussed on the talk page. Under no circumstances should WMF force an unwanted short description from Wikidata as a default. Nothing stops anyone from manually adding a description which is also used by Wikidata, but that is a personal decision of the editor and they take personal responsibility as for any other edit. Automatically providing no description for a predefined list of classes has problems, in that those classes may not be as easily defined as some people might like to think. For example, most list articles don't need a short description, but some do. The same may be true for disambiguation pages. Leaving them blank as the first stage and not displaying a short description until a (hopefully competent) editor has added one is easy to manage for the edge cases, and may be managed by other methods per option 5 of population. It is flexible and can deal with all possibilities. There is no need to make it more complicated and liable to break some time. Ideally the magic word could be given a comment in place of a parameter where an explanation of why there should not be a short description would be useful. In this case the comment should not be displayed and is there to inform editors who might wonder if it had been missed. · · · Peter (Southwood) (talk): 11:43, 8 December 2017 (UTC)

- #1 - Show the Wikidata description instead. —TheDJ (talk • contribs) 12:10, 8 December 2017 (UTC)

- #2. No magic word (and magic word with no parameter) should result in no description, not some non-enwiki data being confusingly shown to readers (while being missed by most vandalism patrollers apparently). Today, for 8 hours, we had this blatant BLP violation on a page with 10,000 pageviews per day. Using these descriptions by default (or at all) is a bad idea, and was rejected at the previous RfC. Fram (talk) 14:21, 8 December 2017 (UTC)

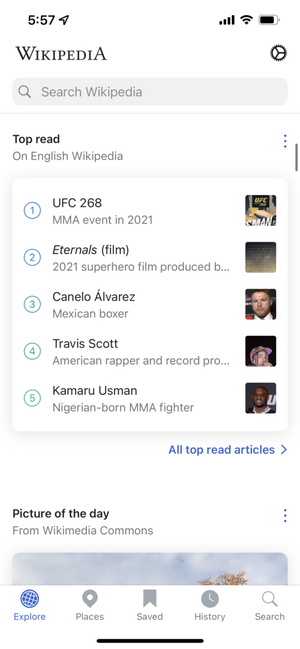

- #1 - From the WMF: We're proposing using Wikidata as the fallback default if there isn't a defined magic word on Wikipedia, because short descriptions are useful for readers (on the app in search results, in the top read module, at the top of article pages) and for editors (in the Visual Editor link dialog). For example: in the top read module from September pictured here, 3 of the 5 top articles benefit from having a short description -- I don't know who Gennady Golovkin and Canelo Álvarez are, and having them described as "Kazakhstani boxer" and "Mexican boxer" tells me whether I'm going to be interested in clicking on those. (The answer on that is no, I'm not really a boxing guy.) I know that Mother! is a 2017 film, but I'm sure there are lots of people who would find that article title completely baffling without the description. Clicking through to the full list of top read articles, there are a lot of names that people wouldn't know -- Amber Tamblyn, Arjan Singh, Goran Dragić. This is a really popular feature on the apps, and it would be next to useless without the descriptions.

- We want to create the magic word, so that Wikipedia editors have editorial control over the descriptions, which they should. But if the magic word is left blank on Wikipedia -- especially in the cases where Wikipedia editors haven't written a description yet -- then for the vast majority of cases, showing the description from Wikidata is better than not showing anything at all. As a reader looking at that top read module, I want to know who Gennady Golovkin is, and the module should say "Kazakhstani boxer," whether that text comes from Wikipedia or Wikidata.

- I know that a big reason why people are concerned about showing the Wikidata descriptions is that the Wikidata community may sometimes be slower than the Wikipedia community to pick up on specific examples of vandalism. The example that Fram cites of Henry VIII of England showing "obey hitler" for two hours is disappointing and frustrating. However, I think that the best solution there should be to improve the community's ability to monitor the short descriptions, so that vandalism or mistakes can be spotted and reverted more quickly. The Wikidata team has been working on providing more granular display in watchlists on Wikipedia, so that Wikipedia editors can see edits to the descriptions for the articles that they're watching, without getting buried by other irrelevant edits made to that Wikidata item. That work is being tracked in this Phabricator ticket -- phab:T90436 -- but I'm not sure what the current status is. Ping for User:Lydia Pintscher (WMDE) -- do you know how this is progressing?

- Sorry for only getting back to this now. It slipped through. So we have continued working on improving which changes from Wikidata show up in the recent changes and watchlist here. Specifically, we have put a lot of work into scaling the current system, which is a requirement for any further improvements. We have made the changes we are sending smaller, and we have made it so that fewer changes are sent from Wikidata to Wikipedia. We have also rolled out fine-grained usage tracking on more wikis (cawiki, cewiki, kowiki, trwiki) to see how it scales. With fine-grained usage tracking you will no longer see changes in recent changes and watchlist that do not actually affect an article, as is happening now. The roll-outs on these wikis so far look promising. In January we will continue rolling it out to more wikis and see if it scales enough for enwiki. At the same time we will talk to various teams at the developer summit in January to brainstorm other ways to make the system scale better or overhaul it. --Lydia Pintscher (WMDE) (talk) 09:31, 19 December 2017 (UTC)

- We've talked in the previous discussions about types of pages where the Wikidata descriptions aren't useful for article display, because they're describing the page itself, rather than the subject of the article. The examples that I know right now are category pages (currently "Wikimedia category page"), disambiguation pages ("Wikimedia disambiguation page"), list pages, and the main page. Those may be helpful in the case of the VE link dialog, especially "disambiguation page", but there's no reason to display those at the top of the article page, where they look redundant and kind of silly. We're proposing that we just filter those out of the article page display, and anywhere else where they're unnecessary. I'd like to know more examples of pages where short descriptions aren't useful, if people know any.

- For article pages, I don't know of any examples so far where a blank description would be better for the people who need them (people reading, searching or adding links on VE). If we're going to build the "show a blank description" feature, then we need to talk about specific use cases where that would be the best outcome. That's how product development works -- you don't build a feature, if you don't have any examples for where it would be useful. If people have specific examples, then that would help a lot. -- DannyH (WMF) (talk) 14:58, 8 December 2017 (UTC)

- "For article pages, I don't know of any examples so far where a blank description would be better " Check the two examples of vandalism on pages with 10K+ pageviews per day I gave in this very discussion, including one very blatant BLP violation which lasted for 8 hours today. In these examples, a blank description would have been far preferable to the vandalized one, no? Both articles, by the way, are semi-protected here, so that vandalism couldn't have been done by the IPs here (and would very likely have been caught much earlier). "specific use cases where that would be the best outcome." = all articles, and certainly BLPs. Fram (talk) 15:19, 8 December 2017 (UTC)

- If you want another example of where no description would be preferable to the Wikidata one, look to the right. This is what people who search for WWII (or have it in "related articles", the mobile app, ...) see right now and have seen for more than 5 hours (it will undoubtedly soon be reverted now that I have posted this here). This kind of thing happens every day, and way too often on some of our most-viewed pages. Fram (talk) 15:38, 8 December 2017 (UTC)

- I agree that the vandalism response rate on Wikidata is sometimes too slow. I think the solution to that is to make that response rate better, by making it easier for Wikipedia editors to monitor and fix vandalism of the descriptions. I disagree that the best solution is to pre-emptively blank descriptions because we know that there's a possibility that they'll be vandalized. I'm asking for specific examples where editors would make the choice to not show a description on the article page, because a blank description is better than the majority of good-to-adequate descriptions already on Wikidata. -- DannyH (WMF) (talk) 16:10, 8 December 2017 (UTC)

- And I am saying that this is a red herring. Firstly, you claim that there exists a majority of good-to-adequate descriptions on Wikidata, without any convincing evidence that this is the case. I am stating that out of several hundred short descriptions that I produced, there were a non-zero number of cases where a short description made no apparent improvement over the article title by itself. · · · Peter (Southwood) (talk): 16:21, 8 December 2017 (UTC)

- DannyH (WMF), Filtering descriptions out of the article page view means that they will be invisible for maintenance which is very bad, unless they are filtered out based on content, not on page type, which may be technically problematic - you tell me, I don't write filter code. Can you guarantee that no vandalism can sneak through by this route? As long as they are visible anywhere in association with the Wikipedia article they are a Wikipedia editorial issue. · · · Peter (Southwood) (talk): 15:39, 8 December 2017 (UTC)

- We are not asking for a development feature to leave out descriptions that don't exist, it is the simplest possible default. Please try to accept that simply displaying whatever content is in the magic word parameter is the simplest and most versatile solution, and that if we leave it blank that is because we prefer it to be left blank. If anyone prefers to have a short description in any of these cases, they can edit Wikipedia to put in the one they think is right, and if anyone disagrees strongly enough to want to remove it, they can follow standard procedure for editorial disagreement, which is get consensus on the talk page. It is not rocket science, it is the Wikipedia way of doing these things. If it is difficult for the magic word to handle a comment in the parameter we can simply put the comment outside. There may be a few more cases where people will fail to notice that it is there, but probably not a train smash. Is there any reason why a comment in the parameter space should not be parsed as equivalent to no description? I have asked this before, and am still waiting for an answer.· · · Peter (Southwood) (talk): 15:39, 8 December 2017 (UTC)

- #1 - and focus on improving the descriptions on Wikidata. Mike Peel (talk) 15:27, 8 December 2017 (UTC)

- See discussion at Wikipedia_talk:Wikidata/2017_State_of_affairs#Circular_"sourcing"_on_Wikidata - I've posted a random sample of 1,000 articles and descriptions, of which only 1 description had a typo and none seemed to be blatantly wrong - although 39% don't yet have a description. So let's add those extra descriptions / improve the existing ones, rather than forking the system. Thanks. Mike Peel (talk) 00:14, 12 December 2017 (UTC)

- That sample includes the many typical descriptions which are right on Wikidata and useless (or at least very unclear) for the average enwiki reader: "Wikimedia disambiguation page" (what is Wikimedia, shouldn't that be Wikipedia, and even then, I know I'm on Wikipedia, and we don't use "Wikipedia article" as description for standard articles either...) There are also further typos ("British Slavation Army officer"), useless descriptions ("human settlement", can we be slightly more precise please), redundant ones (Shine On (Ralph Stanley album) - "album by Ralph Stanley")... And the basic issue, that language-based issues shouldn't be maintained at Wikidata but at the specific languages, is not "forking", it is taking back content which doesn't belong at Wikidata but at enwiki. Fram (talk) 05:45, 12 December 2017 (UTC)

- You may add "Descriptions not in English" to the problems list from that sample: "Engels; schilder; 1919; Londen (Engeland); 1984". Fram (talk) 06:01, 12 December 2017 (UTC)

- And "determined sex of an animal or plant. Use Q6581097 for a male human" is not really suitable for use on enwiki either (but presumably perfect for Wikidata). Neeraj Grover murder case - "TV Executive" seems like the wrong description as well. Stefan Terzić - "Team handball" could also use some improvement. Fram (talk) 07:56, 12 December 2017 (UTC)

- OK, so maybe 4/1000 have typos/aren't in English/are wrong - that's still not bad. Most of the rest seems to be WP:IDONTLIKEIT (where I'd say WP:SOFIXIT on Wikidata, but you don't want to do that). Yes, it is forking - the descriptions currently only exist on Wikidata (we've never had them on Wikipedia), and they aren't going away because of this - so you want to fork them, and in a way that means the two systems can't later be unforked (due to licensing issues). That's not helpful, particularly in the long term. Mike Peel (talk) 19:58, 12 December 2017 (UTC)

- I gave more than 4 examples, some 40% don't have a description (so can hardly be wrong, even if many of those need a description), and many have descriptions we can't or shouldn't use. Basically, you started with 0.1% problem in your view, when it is closer to 50% in reality. Please indicate which licensing issues you see which would make unforking impossible. It seems that these non-issues would then also make it impossible to import the Wikidata descriptions, no? Seems like a red herring to me. By the way, have you ever complained about forking when Wikidata was populated with millions of items from enwiki (and other languages), where from then on they might evolve separately? Or is forking only an issue when it is done from Wikidata to enwiki, and not the reverse? Fram (talk) 22:28, 12 December 2017 (UTC)

- Only a few of your example problems seem to be actual problems, the rest are subjective. You're proposing that we switch to 100% without description, so I can't see how you can argue about the 40% blank descriptions (and they weren't a problem at the start of this discussion). I'm not saying 0.1%, but ~1% seems reasonable here. Enwp descriptions are CC-BY-SA licensed, which means they can't be simply copied to Wikidata as that has a CC-0 license (and yes, this isn't great, and copyrighting the simple descriptions doesn't make any sense, but it is what it is) - although that means that we can still copy from Wikidata to here if needed. I'm complaining that we're forking things here to do the same task (describing topics), and that we're trying to do so using the wrong tool (free text with hacks) rather than a better tool (a structured database). Mike Peel (talk) 23:01, 12 December 2017 (UTC)

- Ah, the old "structured database" vs "free text with hacks" claim, I wondered why it wasn't mentioned yet. In Wikidata, you are putting free text in a database field, which then at runtime gets read and displayed. In enwiki, you are putting free text in a "magic word" template, which then at runtime gets read and displayed. Pretending that the descriptions in Wikidata aren't free text and in enwiki are free text is not really convincing. However, what is the wrong tool for the task is Wikidata, as that is not part of the enwiki page history and wikitext, and thus can't be adequately monitored, protected, ... The only "hack" is the current one, using Wikidata to do something enwiki can do better (and which philosophically also belongs on enwiki, as it is language-based text, not some universally accepted value). Fram (talk) 07:53, 13 December 2017 (UTC)

- #2, or transition from #1 to #2. I have engaged in significant discussions with the WMF on the descriptions issue on the Wikidata/2017 State of affairs talk page. The WMF has valid concerns about abruptly blanking descriptions, and we should try to cooperate on those concerns. Temporarily letting a blank keyword default to wikidata (#1) will give us time to begin filling empty local descriptions before shutting off wikidata descriptions (#2). But in the long run my position is definitely #2. Alsee (talk) 21:02, 8 December 2017 (UTC) Adding explicit support for #5, which essentially matches my original !vote. Alsee (talk) 16:36, 6 January 2018 (UTC)

- This could work. While we are filling in short descriptions, whenever we find an article that should not have a short description, we could put in a non-breaking space to override an unnecessary Wikidata description. We will need to see the actual display shown on mobile, and on desktop too, so we can see what we are doing. As long as there is a display of the short description in actual use on desktop, it might be unnecessary to switch. That would reduce the pressure to rush the process, which may be a good thing, but also may not. · · · Peter (Southwood) (talk): 10:12, 9 December 2017 (UTC)

- Alsee, thanks. I've been staying out of conversations about if/when/how the magic word gets used/populated, because I think those are the content decisions that need to be made by the English WP community. I want to figure out how we can get to the place where Wikipedia editors have proper editorial control over the short descriptions, without hurting the experience of the readers and editors who are using those descriptions now. -- DannyH (WMF) (talk) 23:29, 11 December 2017 (UTC)

- You can enable a view of the Q-code, short description and alias via this script: [[4]].--Carwil (talk) 13:01, 9 December 2017 (UTC)

- Carwil, This is exactly the kind of display I had in mind. It is easily visible, but obviously not part of the article per se, as it is displayed with other metadata in a different text size. To be useful it would have to be visible to all editors who might make improvements to poor quality descriptions, so would have to be a default display on desktop. This may not be well received by all, but it would be useful, maybe as an opt-out for those who really do not want to know. It still does not deal with the inherent problems of having the description on Wikidata, in that it is not Wikipedia and we do not dictate Wikidata's content policies, control their page protection, block their vandals etc, but it does let us see what is there, and fixing is actually quite easy, though maybe I am biased as I have done a fair amount of work on Wikidata. I would be interested to hear the opinions of people who have not previously edited Wikidata on using this script. I can definitely recommend it to anyone who wants to monitor the Wikidata description. Kudos to Yair rand.· · · Peter (Southwood) (talk): 16:15, 9 December 2017 (UTC)

- It also does not solve the problem of different needs for the description. When the Wikidata description is unsuitable for Wikipedia, we should not arbitrarily change it if it is well suited to Wikidata's purposes, but if it is going to be used for Wikipedia, we may have to do just that.· · · Peter (Southwood) (talk): 16:21, 9 December 2017 (UTC)

- #2. Any Wikidata import should be avoided because that content is not subject to Wikipedia editorial control and consensus. Sandstein 16:16, 10 December 2017 (UTC)

- Sandstein, My personal preference is that eventually all short descriptions should be part of Wikipedia, and not imported in run time, however, as an interim measure, to get things moving more quickly, I see some value in initially displaying the Wikidata description as a default for a blank magic word parameter, as it is no worse than what WMF are already doing, and in my opinion are likely to continue doing until they think the Wikipedia local descriptions are better on average. If anyone finds a Wikidata description on display that is unsuitable, all they have to do is insert a better one in the magic word and it immediately becomes a part of Wikipedia. If you find a Wikidata description that is good, you can also insert it into the magic word and make it local, as they are necessarily CC0 licensed. The only limitation on getting 100% local content is how much effort we as Wikipedians are prepared to put into it. Supporters of Wikidata can improve descriptions on Wikidata instead if that is what they prefer to do, and as long as a good short description is displayed, it may happen that nobody feels strongly enough to stop allowing it to be used. I predict that whenever a vandalised description is spotted, most Wikipedians will provide a local short description, so anyone in favour of using Wikidata descriptions would be encouraged to work out how to reduce vandalism and get it fixed faster, which will greatly improve Wikidata. Everybody wins, maybe not as much as either side would prefer, but more than they might otherwise. As it would happen, WMF win the most, but annoying as that may be to some, we can live with it as long as we also have a net gain for Wikipedia and Wikidata. · · · Peter (Southwood) (talk): 16:58, 10 December 2017 (UTC)

- 2. We have neither the responsibility nor the authority to enforce WP guidelines on a project with diametrically opposed policies. Content outside of WP's editorial control should not appear on our pages, period. James (talk/contribs) 16:34, 11 December 2017 (UTC)

- 2 comes closest to my views, given the choices. See my comments at WT:Wikidata/2017 State of affairs/Archive 12 and WT:Wikidata/2017 State of affairs. Also see the RfC from March; most of what was said there is equally relevant to the current question. - Dank (push to talk) 21:01, 11 December 2017 (UTC)

- Comment from WMF: I want to say a word about compromise and consensus. I've been involved in these discussions for almost three months now, and there are a few things that I've been consistent about.

- First is that I recognize and agree that the existing feature doesn't allow Wikipedia editors to have editorial control over the descriptions, and it's too difficult for Wikipedia editors to see the existing descriptions, monitor changes, and fix problems when they arise. Those are problems that need to be fixed, by the WMF product team and/or the Wikidata team.

- Second: the way that we fix this problem doesn't involve us making the editorial decisions about the format or the content. That's up to the English Wikipedia and Wikidata communities, and if there's disagreement between people in those communities, then ultimate control should be located on Wikipedia and not on Wikidata. In other words: when we build the magic word, we're not going to control how it's used, how often, or what the format should be. I think that both of these two points are in line with what most of the people here are saying.

- The third thing is that we're not going to agree to a course of action that results in the mass blanking of existing descriptions, for any meaningful length of time. I recognize that that's something that most of the people here want us to build, but that would be harmful to the readers and editors that use those descriptions, and that matters. This solution needs to have consensus with us, too, because we're the ones who are going to build it. I'm not saying that we're going to ignore the consensus of this discussion; I'm saying that we need to be a part of that consensus. -- DannyH (WMF) (talk) 15:13, 12 December 2017 (UTC)

- How many people have actually complained in the 8 months or so that descriptions have now been disabled in mobile view? "readers and editors that use those descriptions": which editors would that be? Anyway, basically you are not going to interfere in content decisions, unless you don't like the result. But at the same time you can't be bothered to provide the necessary tools to patrol and control your features (and your first point is rather moot when this magic word goes live and works as requested anyway). Which is the same thing you did (personally and as WMF) with Flow, Gather, ... which then didn't get changed, improved, gradually accepted, but simply shot down in flames, at the same time creating lots of unnecessary friction and bad blood. Have you actually learned anything from those debacles? Most people here actually want to have descriptions, and these will be filled quite rapidly (likely to a higher percentage than what is provided now at Wikidata). But we will fill them where necessary, and we will leave them blank where we want them to be blank. You could have suggested over the past few months a compromise, where either "no magic word" or "magic word with no description" would mean "take the wikidata description", and the other meant "no description". You could have suggested "after the magic word is installed, we'll take a transitional period of three months, to see if the descriptions get populated here on enwiki; afterwards we'll disable the "fetch desc from wikidata" completely". Instead you insisted that the WMF would have the final say and would not allow blanks unless it was for a WMF-preapproved list of articles (or article groups). Why? No idea. If the WMF is so bothered that readers should get descriptions no matter what (even if many, many articles don't have Wikidata descriptions anyway in the first place), then they should hire and pay some people to monitor these and make sure that e.g. blatant BLP violations don't remain for hours or days. But forcing us to display non-enwiki content against our will and without providing any serious help in patrolling it is just not acceptable. Fram (talk) 15:44, 12 December 2017 (UTC)

- Fram, those compromises are what I'm asking for us to discuss. I'm glad you're bringing them up, that's a conversation that we can have. I'm going to be talking to the Wikidata team next week about the progress on building the patrolling and moderation tools. We don't have direct control over what the Wikidata team chooses to do, but I want to talk with them about how the continued lack of a way to effectively monitor the short descriptions is affecting this conversation, this community, and the feature as a whole. English Wikipedia editors need to have the tools to effectively populate and monitor the descriptions, and you need to have that on a timeline that makes sense. I need to talk to more people, and keep working on how to make that happen. I'm going to talk with people internally about the transitional period that you're suggesting. -- DannyH (WMF) (talk) 16:04, 12 December 2017 (UTC)

- I think the major concern is the lack of control over enwp content. There are currently only two outside sources of enwp content over which the local community has no control: Commons and Wikidata; it has taken some years for Commons to build a level of trust over their content policies and failsafes to prevent abuse at enwp through Commons. The reason Commons materials are accepted here today is two-fold: 1) they've proven they can handle their business, and 2) there exist local overrides that are transparent and easy to enact. For Wikidata to be useful and to avoid the kind of acrimony we are seeing here, we would need the SAME thing from Wikidata. Point 1) can only occur over time, and Wikidata is far too new to be proven in that direction. Recent gaffes in allowing vandalism off-site at Wikidata to perpetuate at enwp do not help either. If the enwp community is going to feel good about allowing Wikidata to be useful going forward, until that trust reaches what Commons has achieved, we need point 2 more than anything. Defaulting to local control over off-site control is necessary, and any top-down policy that removes local control, either directly or as a fait accompli by subtly controlling the technology, is unlikely to be workable. If Wikidata can prove their ability to take care of their own business reliably over many years, the local community would feel better about handing some of that local control over to them, as works with Commons now. But that cannot happen today, and it cannot happen if local overrides are not simple, robust, and the default. --Jayron32 17:32, 12 December 2017 (UTC)

- "English Wikipedia editors need to have the tools to effectively populate and monitor the descriptions, and you need to have that on a timeline that makes sense." You know, you have lost months doing this by continually stalling the discussions and "misinterpreting" comments (always in the same direction, which is strange for real misunderstandings and looks like wilful obstruction instead). You just give us the magic word, and then we have the tools to monitor the descriptions: recent changes, watchlists, page histories, ... plus tools like semi- or full protection and the like. We can even build filters to check for these changes specifically. We can build bots to populate them. From the very start, everyone or nearly everyone who was discussing these things with you has suggested or stated these things; you were the only one (or nearly the only one) creating obstacles and finding issues with these solutions where none existed. "I'm going to be talking to the Wikidata team next week about the progress on building the patrolling and moderation tools." is totally and utterly irrelevant for this discussion, even though it is something that is sorely needed in general. Patrolling and moderating Wikidata descriptions is something we are not going to do; we will patrol and moderate ENWIKI descriptions, and we have the tools to do so (a conversation may be needed whether the descriptions will be shown in the desktop version or not, this could best be a user preference, but that is not what you mean). Please stop fighting lost battles and get on with what is actually decided and needed instead. Fram (talk) 17:54, 12 December 2017 (UTC)

- I'm talking to several different groups right now -- the community here, the WMF product team, and the Wikidata team -- and I'm trying to get all those groups to a compromise that gives Wikipedia editors the control over these descriptions that you need, and doesn't result in mass blanking of descriptions for a meaningful amount of time. That's a process that takes time, and I'm still working with each of those groups. I know that there isn't much of a reason for you to believe or trust me on this. I'm just saying that's what I'm doing. -- DannyH (WMF) (talk) 18:02, 12 December 2017 (UTC)

- Indeed, I don't. I'm interested to hear why you would need to talk to the Wikidata team to find a compromise about something which won't affect the Wikidata team one bit, unless you still aren't planning on implementing the agreed upon solution and let enwiki decide how to deal with it. Fram (talk) 22:28, 12 December 2017 (UTC)

- Fram, you're saying "us", "we", etc. here rather freely. Please do not speak for all editors here, particularly when putting your own views forward at the same time. There's a reason we have RfC's... Thanks. Mike Peel (talk) 21:11, 12 December 2017 (UTC)

- Don't worry, I'm not speaking for you. But we (enwiki) had an RfC on this already, and it's the consensus from there (and what is currently the consensus at this RfC) I'm defending. There's indeed a reason we have RfC's, and some of us respect the results of those. Fram (talk) 22:28, 12 December 2017 (UTC)

- I'm glad you're not speaking for me - but why are you trying to speak for everyone except for me? What consensus are you talking about, this RfC is still running (although I'm worried that potential participants are being scared off by these arguments in the !vote sections)? And what consensuses are you accusing me of disrespecting? Mike Peel (talk) 22:40, 12 December 2017 (UTC)

- FWIW, Fram definitely speaks for me. James (talk/contribs) 23:24, 12 December 2017 (UTC)

- You don't really seem to care about the results of the previous RfC on this, just like you didn't respect the result of the WHS RfC when your solution was not to revert to non-Wikidata versions, but to bot-move the template uses to a /Wikidata subpage which was identical to the rejected template. Basically, when you have to choose between defending Wikidata use on enwiki or respecting RfCs, you go with the former more than the latter. Fram (talk) 07:53, 13 December 2017 (UTC)

- I'm glad you're not speaking for me - but why are you trying to speak for everyone except for me? What consensus are you talking about, this RfC is still running (although I'm worried that potential participants are being scared off by these arguments in the !vote sections)? And what consensuses are you accusing me of disrespecting? Mike Peel (talk) 22:40, 12 December 2017 (UTC)

- Don't worry, I'm not speaking for you. But we (enwiki) had an RfC on this already, and it's the consensus from there (and what is currently the consensus at this RfC) I'm defending. There's indeed a reason we have RfC's, and some of us respect the results of those. Fram (talk) 22:28, 12 December 2017 (UTC)

- I'm talking to several different groups right now -- the community here, the WMF product team, and the Wikidata team -- and I'm trying to get all those groups to a compromise that gives Wikipedia editors the control over these descriptions that you need, and doesn't result in mass blanking of descriptions for a meaningful amount of time. That's a process that takes time, and I'm still working with each of those groups. I know that there isn't much of a reason for you to believe or trust me on this. I'm just saying that's what I'm doing. -- DannyH (WMF) (talk) 18:02, 12 December 2017 (UTC)

- Fram, those compromises are what I'm asking for us to discuss. I'm glad you're bringing them up, that's a conversation that we can have. I'm going to be talking to the Wikidata team next week about the progress on building the patrolling and moderation tools. We don't have direct control over what the Wikidata team chooses to do, but I want to talk with them about how the continued lack of a way to effectively monitor the short descriptions is affecting this conversation, this community, and the feature as a whole. English Wikipedia editors need to have the tools to effectively populate and monitor the descriptions, and you need to have that on a timeline that makes sense. I need to talk to more people, and keep working on how to make that happen. I'm going to talk with people internally about the transitional period that you're suggesting. -- DannyH (WMF) (talk) 16:04, 12 December 2017 (UTC)

- How many people have actually complained in the 8 months or so since descriptions were disabled in mobile view? "readers and editors that use those descriptions": which editors would that be? Anyway, basically you are not going to interfere in content decisions, unless you don't like the result. But at the same time you can't be bothered to provide the necessary tools to patrol and control your features (and your first point is rather moot when this magic word goes live and works as requested anyway). Which is the same thing you did (personally and as WMF) with Flow, Gather, ... which then didn't get changed, improved, and gradually accepted, but were simply shot down in flames, at the same time creating lots of unnecessary friction and bad blood. Have you actually learned anything from those debacles? Most people here actually want to have descriptions, and these will be filled quite rapidly (likely to a higher percentage than what is provided now at Wikidata). But we will fill them where necessary, and we will leave them blank where we want them to be blank. You could have suggested over the past few months a compromise, where either "no magic word" or "magic word with no description" would mean "take the wikidata description", and the other meant "no description". You could have suggested "after the magic word is installed, we'll take a transitional period of three months, to see if the descriptions get populated here on enwiki; afterwards we'll disable the "fetch desc from wikidata" completely". Instead you insisted that the WMF would have the final say and would not allow blanks unless it was for a WMF-preapproved list of articles (or article groups). Why? No idea. If the WMF is so bothered that readers should get descriptions no matter what (even if many, many articles don't have Wikidata descriptions anyway in the first place), then they should hire and pay some people to monitor these and make sure that e.g. blatant BLP violations don't remain for hours or days. But forcing us to display non-enwiki content against our will and without providing any serious help in patrolling it is just not acceptable. Fram (talk) 15:44, 12 December 2017 (UTC)

- ok... I propose that from this point on, DannyH, User:Mike Peel and User:Fram, cease any further participation in this RfC. You three and your mutual disagreements are again completely dominating the discussion, the exact thing that the Arbcom case was warning against. This is NOT helping the result of this discussion. —TheDJ (talk • contribs) 14:24, 13 December 2017 (UTC)

- #3 – This makes the most sense to me for reasons I stated above. I would amend #3 only by saying: Immediately populate a local description for any pages being actively protected from vandalism, which could just mean protected pages, or could mean (where appropriate) pages subject to arbitration enforcement as well.--Carwil (talk) 18:02, 13 December 2017 (UTC)

- #1 This whole idea is just adding complexity over a rather small problem. The less duplication of the data, the better. We should focus on ways to follow more projects at a glance, and on better tools to follow changes on Wikipedia, rather than splitting the Wikimedian forces across all the different projects. Co-operation and sharing are the essence of these projects, not control, defiance and data duplication. TomT0m (talk) 16:34, 15 December 2017 (UTC)

- #1 per DannyH. Additionally, we can configure protected articles to never display data from Wikidata. It's worth noting that this option allows you to run a bot that puts " " as description for a specific class of articles when you don't like the kind of descriptions that Wikidata shows for those articles. ChristianKl (talk) 15:29, 17 December 2017 (UTC)

- For clarification, is your claim that we can configure protected articles to never display data from Wikidata based on knowing how this could be done, and that it is a reasonably easy thing to do, or is it a conjecture? Bear in mind how WMF is using the data on the mobile display. I ask because I do not know how they do it, so cannot predict how easy or otherwise it would be to block from the Wikipedia side. Ordinary logic suggests that it may not be so easy, or it would already have been done. · · · Peter (Southwood) (talk): 04:44, 18 December 2017 (UTC)

- Without the magic keyword being active, it's not possible to easily prevent the import. However, once the feature is implemented you will be able to run a bot quite easily that sets "magic keyword = ' '" for every article that's protected, or for other classes of articles where there's the belief that the class shouldn't import Wikidata and is better off showing the user a ' ' instead of the Wikidata description.

- Additionally, I think the WMF should hardcode a limitation so that once a Wikipedia semi-protects an article, the article stops displaying Wikidata-derived information. That would take some work on the WMF's part, but if that's what they have to do to get a compromise I think they would be happy to provide that guarantee. ChristianKl (talk) 12:31, 18 December 2017 (UTC)

- I would be both encouraged and a bit surprised to see WMF provide a guarantee. So far they have been very careful to avoid making any commitments to anything we have requested. I will believe it when I see it. I have no personal knowledge of the complexity of coding a filter that checks whether the article is protected or semi-protected, uses that to decide whether a Wikidata description is shown, and is fast and efficient enough to run every time a short description may be displayed, but I would guess that this is an additional overhead that WMF would prefer to avoid. Requiring such additional software could also delay getting the magic word implemented, which would be a major step in the wrong direction. This needs to be simple and efficient, so that bugs are minimised and speed maximised. Putting in a blank string parameter that displays as a blank string is easy and simple and requires no complicated extra coding. This can be done by any admin protecting an article where there is no local short description. · · · Peter (Southwood) (talk): 16:33, 18 December 2017 (UTC)

- ChristianKl, wouldn't it make the most sense to produce a description for each protected article, rather than produce a blank? We're talking about a considerably shorter list than all articles here. Then we would have functionality (what WMF says they want) and protection from vandalism on Wikidata.--Carwil (talk) 15:23, 19 December 2017 (UTC)

- I agree with you that writing descriptions in those cases makes sense, but the people who voted #2 seem to be of the opinion that this isn't enough protection from vandalism on Wikidata, and don't want Wikidata content to be shown even if the 'magic word' is empty. ChristianKl (talk) 17:55, 19 December 2017 (UTC)

- #1 Most of the time, for most purposes, the Wikidata descriptions are fine. #1 gives a sensible over-ride mechanism for cases where there is particular sensitivity. Jheald (talk) 20:55, 17 December 2017 (UTC)

- Unless you have a reasonably robust analysis to base this prediction on, it is speculation. However, it will make little difference in the long run, as it will be easy to override the Wikidata descriptions through the magic word. It is just a question of how tedious it would be, depending on what proportion of the 5.5 million articles will have to be done. · · · Peter (Southwood) (talk): 04:44, 18 December 2017 (UTC)

- #2 Agree with Alsee. Most sensible. Wikidata entries can be imported initially, if needed. It's easiest if things are kept in the same place, with the same community and policies. I really don't see the advantage of having things on Wikidata, since the description is different for every language. Galobtter (pingó mió) 11:54, 19 December 2017 (UTC) Peter Southwood makes excellent practical points too ("an interim measure, to get things moving more quickly, I see some value in initially displaying the Wikidata description as a default for a blank magic word parameter, as it is no worse than what WMF are already doing"). Galobtter (pingó mió) 11:58, 19 December 2017 (UTC) Addendum: #5 is basically it, yes. What matters is that in the long run the descriptions are here. Galobtter (pingó mió) 07:21, 10 January 2018 (UTC)

- Galobtter—Wikidata has a data structure that maintains separate descriptions for each language (or at least each language with a Wikipedia).--Carwil (talk) 15:23, 19 December 2017 (UTC)

- I know that. What I'm saying is: why have all that when it's mostly useful only to that language's Wikipedia? Other data on Wikidata is useful across Wikipedias: raw numbers, language links, etc. Galobtter (pingó mió) 15:27, 19 December 2017 (UTC)

- If the description is defined in the article text, then it can only be used in that article, on that language Wikipedia. If the description is on Wikidata, then you can access it from other articles (e.g., list articles), and places like Commons (descriptions for categories on the same topic as the articles), or on Wikivoyage etc. If it's in a data structure like Wikidata's, then it's a lot easier to reuse it automatically than if it's embedded in free-form text. Thanks. Mike Peel (talk) 15:34, 19 December 2017 (UTC)

- The description on Wikidata will remain available, if other projects or environments want to use it and think it suits their purpose (and can better access it than Enwiki magic words). This is rather out-of-scope for this discussion though, which won't change anything on Wikidata. Fram (talk) 15:47, 19 December 2017 (UTC)

- 2 because Wikidata is shite and shouldn't ever be used regardless of how good it is in some articles. WMF have had ample opportunity to patrol that site and do something about it; instead they've ignored requests time and time again, so it's about time we did something ourselves. Anyway, back on point: using blanks is better. If someone adds a silly description they'll be reverted and no doubt one will be added. –Davey2010 Merry Xmas / Happy New Year 23:31, 27 December 2017 (UTC)

- Combination of 2 and 1: Wikidata descriptions are off (except perhaps for a brief run-in period), but could be shown once, and only once, changes to them appear in the watchlist. Doc James (talk · contribs · email) 06:24, 30 December 2017 (UTC)

- 2, because we need complete editorial control over this type of content. Alternatively, #5 as a compromise. Tony Tan · talk 04:21, 17 January 2018 (UTC)

- I am convinced that vandalism affects how brief descriptions in search results are displayed, making me reluctant to vote for #1. True, showing no descriptions would be less helpful for readers searching for lesser-known topics with less recognizable titles/names. Nevertheless, I see the consensus favoring local control over central control. #5 is nice, but switching Wikidata descriptions off is not helpful; rather, there would be too much local work, manually and/or by bot. Whether #2 or #5, local descriptions would be vandalized (unless protected in some way?) in the same way Wikidata has been vandalized. Well, let's go for #2 for most people, per others. Additionally or alternatively, how about #4: have options #1 and #2 via user preferences, but use #2 as the default choice? If anyone wants to use Wikidata descriptions, why not provide the option in user preferences? George Ho (talk) 12:23, 24 January 2018 (UTC)

Filling in blanks: option #5