Talk:Orthogonality principle

This article is rated C-class on Wikipedia's content assessment scale.

Dirac

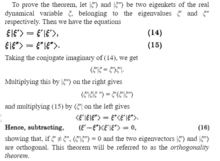

In The Principles of Quantum Mechanics, Dirac presents what he calls the "Orthogonality Theorem" on page 32. The theorem states "two eigenvectors of a real dynamical variable belonging to different eigenvalues are orthogonal." Dirac provides a proof of this theorem on that same page. I am uncertain where this information belongs, or how to include it.

— Preceding unsigned comment added by SpiralSource (talk • contribs) 11:19, 16 February 2022 (UTC)
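For readers unfamiliar with the statement quoted above, here is a sketch of the usual argument in bra-ket notation. It is a standard textbook derivation, not a quotation of Dirac's page 32, and it assumes that a "real dynamical variable" corresponds to a self-adjoint operator, written here as $\xi$, with real eigenvalues $a \neq b$.

```latex
\begin{aligned}
\xi\,|a\rangle &= a\,|a\rangle, \qquad \xi\,|b\rangle = b\,|b\rangle, \qquad a \neq b,\ a, b \in \mathbb{R},\\
\langle b|\,\xi &= b\,\langle b| \quad \text{(conjugate of the second equation, using } \xi^\dagger = \xi \text{ and } b \text{ real)},\\
\langle b|\,\xi\,|a\rangle &= a\,\langle b|a\rangle = b\,\langle b|a\rangle,\\
(a - b)\,\langle b|a\rangle &= 0 \;\Longrightarrow\; \langle b|a\rangle = 0 .
\end{aligned}
```

This is a statement about eigenvectors of an operator, which is a different sense of "orthogonality" from the estimation-error condition the article covers.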

Non-Bayesian

I have partially reverted Melcombe's last edits. As far as I can tell, the orthogonality principle applies only to Bayesian estimators; it seems to me that it does not make sense when there is no prior. Concerning the second edit, while the condition can be viewed as a Bayesian version of unbiasedness, this is a rather unusual definition, and I think that adding it into the sentence is more confusing than useful. I hope I made myself clear and please feel free to continue the discussion if you think I'm wrong. --Zvika (talk) 17:22, 19 March 2009 (UTC)

- (i) There is no sign of a "prior" in this article.

- (ii) If there is no assumption of unbiasedness (or, more likely in this context, that the means are zero), then the statement (i.e. "if and only if ...") is wrong, as are the supposed derivations later on in the article. The true minimum mean square error estimate would depend on the means of the y’s, and is not unbiased. Of course you might like to have invariance rather than unbiasedness.

- Melcombe (talk) 10:25, 20 March 2009 (UTC)

- (i) The statement "let x be an unknown random vector" is meant to imply that x has a prior distribution, for otherwise it would be a deterministic unknown.

- (ii) I disagree. Possibly you think this because Michael Hardy removed the constant c from the discussion in the article; I have restored that version. I will add an example to the article which I hope will clear things up. --Zvika (talk) 12:50, 20 March 2009 (UTC)
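The disagreement in point (ii) about nonzero means and the constant c can be illustrated with a small closed-form calculation. The sketch below assumes a scalar model y = x + w with zero-mean noise w uncorrelated with x; the function names and the numerical values are illustrative and do not come from the article. It compares the affine estimator a*y + c with the best estimator of the form a*y (no constant).

```python
def mse(a, c, mean_x, var_x, var_w):
    """Mean squared error of x_hat = a*y + c when y = x + w.

    Assumes w is zero-mean with variance var_w and uncorrelated with x.
    """
    bias = a * mean_x + c - mean_x  # E[x_hat - x], since E[y] = mean_x
    variance = (a - 1.0) ** 2 * var_x + a ** 2 * var_w
    return variance + bias ** 2


def affine_estimator(mean_x, var_x, var_w):
    """Coefficients (a, c) of the best estimator of the form a*y + c."""
    a = var_x / (var_x + var_w)  # Cov(x, y) / Var(y)
    c = mean_x - a * mean_x      # makes the estimation error zero-mean
    return a, c


def homogeneous_estimator(mean_x, var_x, var_w):
    """Coefficients (a, 0) of the best estimator of the form a*y."""
    second_xy = var_x + mean_x ** 2          # E[x y]
    second_yy = var_x + var_w + mean_x ** 2  # E[y^2]
    return second_xy / second_yy, 0.0


# Illustrative values only (assumptions, not taken from the article).
mean_x, var_x, var_w = 3.0, 2.0, 0.5
a_aff, c_aff = affine_estimator(mean_x, var_x, var_w)
a_hom, c_hom = homogeneous_estimator(mean_x, var_x, var_w)
mse_affine = mse(a_aff, c_aff, mean_x, var_x, var_w)
mse_homogeneous = mse(a_hom, c_hom, mean_x, var_x, var_w)
```

Under these assumptions the affine estimator has zero-mean error and the smaller mean squared error, while the estimator without c has nonzero mean error whenever mean_x is nonzero. That is consistent with both comments: the means matter unless a constant term is allowed.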

Unclear

The linear estimator section is at best unclear. Seldom does one speak of estimating a random vector, but Bayesian estimation of a vector of parameters would treat the vector of parameters as what frequentists would call a random vector. In a frequentist context, one might speak of predicting a random vector.

I'll be back.... Michael Hardy (talk) 17:55, 19 March 2009 (UTC)

- Could you explain what you find unclear? The context is Bayesian and not frequentist, and the goal is to estimate one random vector from another. For example, we might have an unknown vector X and we might have measurements Y=X+W, where W is a random noise vector. Both X and Y are then random. This is a standard setting in signal processing. See the reference given in the article. --Zvika (talk) 08:46, 20 March 2009 (UTC)
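The measurement model Y = X + W mentioned above lends itself to a quick numerical check of the orthogonality condition. The following is a minimal sketch for the scalar case, assuming X and W are independent zero-mean Gaussians; the variances, sample size, and function names are illustrative assumptions. It estimates E[(x_hat - x) y] by simulation for the linear estimator x_hat = a*y.

```python
import random


def lmmse_gain(var_x, var_w):
    """Gain a of the linear MMSE estimator x_hat = a*y for y = x + w.

    With x and w zero-mean and uncorrelated, E[x y] = var_x and
    E[y^2] = var_x + var_w, so a = E[x y] / E[y^2].
    """
    return var_x / (var_x + var_w)


def error_measurement_correlation(var_x=2.0, var_w=0.5,
                                  n_samples=100_000, seed=0):
    """Sample average of (x_hat - x) * y, an estimate of E[(x_hat - x) y]."""
    rng = random.Random(seed)
    a = lmmse_gain(var_x, var_w)
    total = 0.0
    for _ in range(n_samples):
        x = rng.gauss(0.0, var_x ** 0.5)  # unknown quantity
        w = rng.gauss(0.0, var_w ** 0.5)  # measurement noise
        y = x + w                         # observed measurement
        total += (a * y - x) * y          # error times measurement
    return total / n_samples
```

Calling `error_measurement_correlation()` should return a value close to zero, up to sampling noise, which is the scalar form of the condition that the estimation error is orthogonal to the measurement.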

- I have to agree with Michael Hardy about the lack of clarity regarding X. It seems that the articles Minimum mean square error and Mean squared error only deal with scalar quantities. It may be that you only want minimum mean square error on a one-element-at-a-time basis. Otherwise you would need to think about measures of the size of mean square error matrices. Melcombe (talk) 15:56, 20 March 2009 (UTC)

- The context is not specifically Bayesian, and not Bayesian at all in any real sense. If it were, there would be some representation of some unknown parameters and some conditioning on observations (and, for generality, observations besides those used at the stage of "estimation" dealt with in this article). Instead all that is being done is to assume that there is some joint distribution of the x and the y whose properties are known. Since no real "inference" is being done, there is no point in trying to distinguish between Bayesian and frequentist. In more general cases where someone might apply Bayes' theorem to derive a conditional distribution, that doesn't make it a Bayesian analysis (as the term is usually used). Melcombe (talk) 10:17, 20 March 2009 (UTC)

- True, there is no specification of a "prior" and "conditional". Nevertheless, the fact that x and y have a joint distribution means that one could find the prior (= the marginal of x) and the conditional, one simply did not formulate the problem with that terminology in mind. I still think the problem qualifies as Bayesian estimation. And, yes, there is an inference here: the estimation of x from y. --Zvika (talk) 13:27, 20 March 2009 (UTC)

- It may be a Bayesian estimation, but it is not only valid in a Bayesian context. In many cases the required distribution for x would be a thing defined by a model, for example a stationary distribution in time series analysis, or a model that has been fitted to more extensive data. Any frequentist would be perfectly happy with the manipulations here, including those necessary to derive this so-called "orthogonality principle". It would only be a "Bayesian estimate", in the sense that distinguishes it from a frequentist estimate (which seems to be what is implied), if you were treating quantities which are definitely non-random to a frequentist as if they are random variables. For example, both x and y might be daily rainfall totals at collections of sites, with y being those sites where observations happen to have been taken. A simple spatial correlation function could be fitted to data for a long period and a smooth map of mean rainfalls could be formed similarly: these can be used to determine the required joint distribution of x and y. A frequentist would be perfectly happy with this approach to forming interpolated values of rainfall, although a meteorological practitioner might want to ensure that estimates can never be negative. Melcombe (talk) 15:56, 20 March 2009 (UTC)

- It sounds to me like our difference of opinion is more in terminology than content. To me, a Bayesian estimator is one which minimizes the posterior expected loss, implying that there is a posterior (and hence a prior). If the loss is squared error, then the resulting estimator is the posterior mean, $\hat{x}(y) = E[x \mid y]$. At least where I come from, this is the standard definition. What is the origin of the prior? Well, that is a more philosophical than mathematical question. I could settle for a different name but I think the basic idea -- that we must have a prior -- needs to be explained here. --Zvika (talk) 18:28, 21 March 2009 (UTC)
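For reference, under squared-error loss the minimizer of the posterior expected loss is the conditional mean of x given y. A brief derivation sketch follows, assuming x has finite second moments; the candidate estimate is written $\hat{x}$, and $\mu(y) = E[x \mid y]$ is shorthand introduced here.

```latex
\begin{aligned}
E\!\left[\|x - \hat{x}\|^2 \,\middle|\, y\right]
  &= E\!\left[\|x - \mu(y)\|^2 \,\middle|\, y\right]
   + 2\,\bigl(\mu(y) - \hat{x}\bigr)^{T} E\!\left[x - \mu(y) \,\middle|\, y\right]
   + \|\mu(y) - \hat{x}\|^2 \\
  &= E\!\left[\|x - \mu(y)\|^2 \,\middle|\, y\right] + \|\mu(y) - \hat{x}\|^2 ,
\end{aligned}
```

The cross term vanishes because $E[x - \mu(y) \mid y] = 0$. The first term does not depend on $\hat{x}$, so the expression is minimized by $\hat{x} = \mu(y) = E[x \mid y]$. Only the joint distribution of x and y enters this argument, whichever name is given to the marginal of x.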