Draft:Retrieval Augmented Generation (RAG)

Submission declined on 3 May 2024 by ToadetteEdit (talk). This submission is not adequately supported by reliable sources. Reliable sources are required so that information can be verified. If you need help with referencing, please see Referencing for beginners and Citing sources.


Submission declined on 8 February 2024 by Curb Safe Charmer (talk). The proposed article does not have sufficient content to require an article of its own, but it could be merged into the existing article at Prompt engineering. Since anyone can edit Wikipedia, you are welcome to add that information yourself. Thank you.

Comment: The RAG method does not alter the user prompt; therefore it is not relevant in an article focused on altering prompts (prompt engineering). numiri (talk) 02:30, 16 February 2024 (UTC)


Comment: Retrieval-augmented generation currently redirects to a section on that subject in the prompt engineering article. The redirect could be replaced with an article, but only once it substantially expands on what is already written on the subject in the prompt engineering article. Curb Safe Charmer (talk) 15:41, 8 February 2024 (UTC)



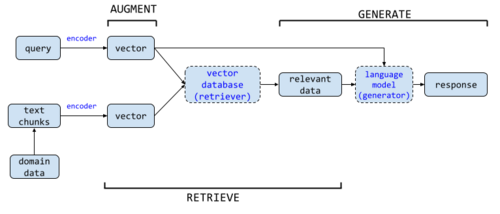

Retrieval Augmented Generation (RAG) is a neural network method for enhancing language models with information unseen during training, so that they can perform tasks such as answering questions using that added information [1]. In its original 2020 form [2], the weights of the neural network in a RAG system do not change. In contrast, the weights do change in other language model enhancement methods such as fine-tuning. In RAG, a body of new information is vectorized, and selected portions are retrieved when the network needs to generate a response.

The problems that RAG addresses are information staleness and factual accuracy (sometimes discussed under the terms grounding and hallucination).
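The retrieval step described above can be illustrated with a minimal sketch. It is not the method of the original paper: the `embed` function below is a toy bag-of-words stand-in for a trained encoder, and the names `embed`, `cosine`, and `retrieve` are hypothetical. The sketch only shows how passages are vectorized and how the best match for a query is selected; passing the retrieved text to a generator is omitted.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an encoder (assumption): a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, passages, k=1):
    """Return the k passages most similar to the query."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]


# Illustrative passages; in practice these would come from the new body of information.
passages = [
    "The Eiffel Tower is in Paris",
    "Mount Everest is the tallest mountain on Earth",
    "Python is a programming language",
]
top = retrieve("Where is the Eiffel Tower", passages, k=1)
```

In a full system the retrieved passages would be supplied to the language model together with the query; no model weights are updated in this flow.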

Techniques

Improvements to the response can be applied at different stages in the RAG flow.

Encoder

These methods center on encoding text as either dense or sparse vectors. Sparse vectors, which encode the identity of a word, are typically as long as the dictionary and contain almost all zeros. Dense vectors, which aim to encode meaning, are much shorter and contain far fewer zeros.
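The difference between the two representations can be seen in a small sketch. The eight-word vocabulary and the dense values below are made up for illustration; a real dictionary would have many thousands of entries and a real dense vector would be produced by a trained encoder.

```python
# Hypothetical toy vocabulary; a real dictionary is far larger.
vocabulary = ["cat", "dog", "fish", "bird", "horse", "sat", "ran", "swam"]


def sparse_vector(text, vocab):
    """One slot per vocabulary term: 1 if the term occurs in the text, else 0."""
    words = set(text.lower().split())
    return [1 if term in words else 0 for term in vocab]


sparse = sparse_vector("the cat sat", vocabulary)
zero_fraction = sparse.count(0) / len(sparse)  # share of slots that are zero

# A dense vector is much shorter and mostly non-zero.
# These values are invented for illustration only.
dense = [0.12, -0.48, 0.33]
```

As the vocabulary grows, the sparse vector grows with it and the share of zeros approaches one, whereas the dense vector keeps a fixed, small length.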

- Several enhancements can be made to the way similarities are calculated in vector stores (databases). Performance can be improved with faster dot products, approximate nearest neighbors, or centroid searches.[3] Accuracy can be improved with late interactions.[4]

- Hybrid vectors combine dense vector representations with sparse one-hot vectors, so that the faster sparse dot products can be used rather than dense ones.[5] Other methods combine sparse methods (BM25, SPLADE) with dense ones such as DRAGON.
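Late interaction, mentioned in the list above, can be sketched as a scoring function that compares every query token vector with every document token vector, keeps the best match per query token, and sums the results. The code below is a simplified illustration of that idea, not the ColBERT implementation; the function names and the two-dimensional token vectors are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def maxsim_score(query_vecs, doc_vecs):
    """For each query token, take its best-matching document token, then sum."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Made-up token embeddings: two query tokens and two documents of two tokens each.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[0.9, 0.1], [0.2, 0.8]]
doc_b = [[0.5, 0.5], [0.4, 0.4]]

score_a = maxsim_score(query, doc_a)
score_b = maxsim_score(query, doc_b)
```

Here `doc_a` scores higher than `doc_b` because each query token finds a close match among its tokens. A single-vector comparison would have to compress each document into one vector before comparing.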

Retriever-centric methods

- Pre-train the retriever using the Inverse Cloze Task.[6]

- Progressive data augmentation. The DRAGON method samples difficult negatives to train a dense vector retriever.[7]

- Under supervision, train the retriever for a given generator. Given a prompt and the desired answer, retrieve the top-k vectors and feed them into the generator to obtain a perplexity score for the correct answer. Then adjust the retriever by minimizing the KL-divergence between the retriever's probability distribution over the retrieved vectors and the LM likelihoods.[8]

- Use reranking to train the retriever.[9]
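The supervised objective in the list above can be sketched as follows. This is a simplified illustration under stated assumptions, not the published training code: the retriever's similarity scores and the language model's scores for the desired answer (for example, log-likelihoods) are each turned into a distribution over the top-k passages with a softmax, and the loss is the KL-divergence between them. The function names and the temperature parameters `gamma` and `beta` are hypothetical choices for this example.

```python
import math


def softmax(scores, temperature=1.0):
    """Convert raw scores into a probability distribution."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same items."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def retriever_loss(retrieval_scores, lm_scores, gamma=1.0, beta=1.0):
    """KL-divergence between the retriever distribution and the LM-derived one."""
    p_retriever = softmax(retrieval_scores, gamma)
    q_lm = softmax(lm_scores, beta)
    return kl_divergence(p_retriever, q_lm)


# Made-up scores for three retrieved passages.
aligned = retriever_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
misaligned = retriever_loss([2.0, 1.0, 0.5], [0.5, 1.0, 2.0])
```

The loss is zero when the retriever ranks passages exactly as the language model finds them useful, and positive otherwise. In training, only the retriever would be updated to reduce this loss, with the generator left unchanged.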

Language model

By redesigning the language model with the retriever in mind, a network 25 times smaller can achieve perplexity comparable to that of its much larger counterparts.[10] Because it is trained from scratch, this method (Retro) incurs the heavy cost of training runs that the original RAG scheme avoided. The hypothesis is that, by being given domain knowledge during training, Retro needs to focus less on the domain and can devote its smaller weight resources to language semantics alone. The redesigned language model is shown here.

It has been reported that Retro is not reproducible, so modifications were made to make it so. The more reproducible version is called Retro++ and includes in-context RAG.[11]

Chunking

Converting domain data into vectors should be done thoughtfully. It is naive to convert an entire document into a single vector and expect the retriever to find details in that document in response to a query. There are various strategies for breaking up the data; this is called chunking.
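One simple chunking strategy, shown here only as an illustrative sketch, splits the text into fixed-size windows of words that overlap slightly, so that a detail falling on a boundary still appears whole in at least one chunk. The function name `chunk_words` and its default sizes are hypothetical; other strategies split on sentences, paragraphs, or document structure.

```python
def chunk_words(text, chunk_size=5, overlap=2):
    """Split text into overlapping windows of at most chunk_size words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


chunks = chunk_words(
    "one two three four five six seven eight nine ten",
    chunk_size=4,
    overlap=1,
)
```

Each chunk would then be vectorized separately, so that a query can match a specific part of a document rather than the document as a whole.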

References

1. "What Is Retrieval-Augmented Generation". blogs.nvidia.com. 15 November 2023.

2. Lewis, Patrick; Perez, Ethan (2020). "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks" (PDF). proceedings.neurips.cc.

3. "faiss". GitHub.

4. Khattab, Omar; Zaharia, Matei (2020). "ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT". pp. 39–48. doi:10.1145/3397271.3401075. ISBN 978-1-4503-8016-4.

5. Formal, Thibault; Lassance, Carlos; Piwowarski, Benjamin; Clinchant, Stéphane (2021). "SPLADE v2: Sparse Lexical and Expansion Model for Information Retrieval". arXiv. S2CID 237581550.

6. Lee, Kenton; Chang, Ming-Wei; Toutanova, Kristina (2019). "Latent Retrieval for Weakly Supervised Open Domain Question Answering" (PDF).

7. Lin, Sheng-Chieh; Asai, Akari (2023). "How to Train Your DRAGON: Diverse Augmentation Towards Generalizable Dense Retrieval" (PDF).

8. Shi, Weijia; Min, Sewon (2024). "REPLUG: Retrieval-Augmented Black-Box Language Models". pp. 8371–8384. arXiv:2301.12652. doi:10.18653/v1/2024.naacl-long.463.

9. Ram, Ori; Levine, Yoav; Dalmedigos, Itay; Muhlgay, Dor; Shashua, Amnon; Leyton-Brown, Kevin; Shoham, Yoav (2023). "In-Context Retrieval-Augmented Language Models". Transactions of the Association for Computational Linguistics. 11: 1316–1331. doi:10.1162/tacl_a_00605.

10. Borgeaud, Sebastian; Mensch, Arthur (2021). "Improving language models by retrieving from trillions of tokens" (PDF).

11. Wang, Boxin; Ping, Wei (2023). "Shall We Pretrain Autoregressive Language Models with Retrieval? A Comprehensive Study" (PDF).